Social Machinery

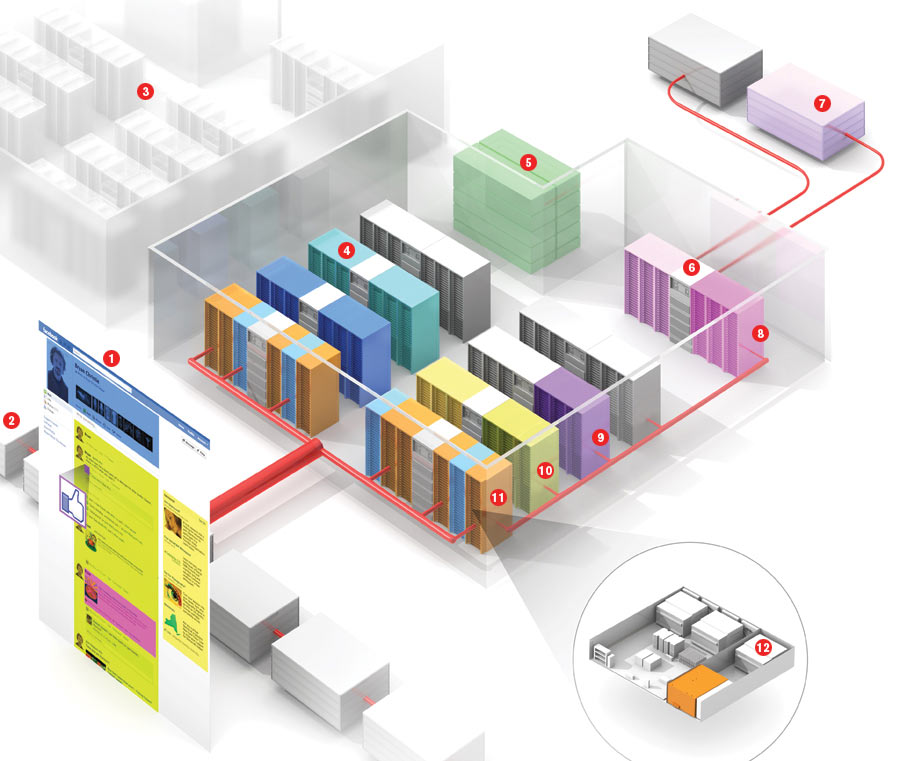

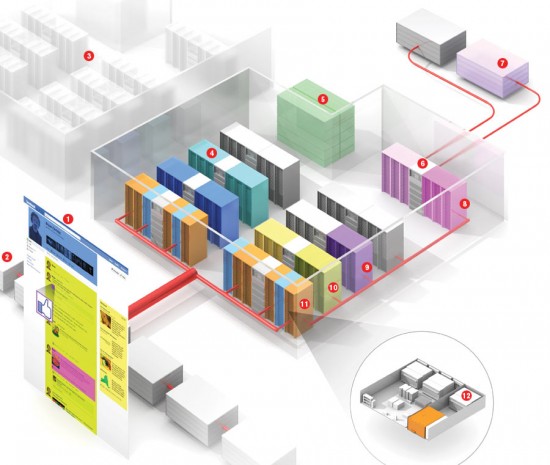

2. CONTENT DELIVERY NETWORK: Commercial services store and distribute pages to users so that data centers don’t become bottlenecked.

3. OTHER DATA CENTERS: Several data centers share information directly with one another, allowing for load balancing and rapid synchronization of user data.

4. PHOTOGRAPHS: The more than 100 million photos uploaded daily are stored in a distributed database called Haystack, developed by Facebook.

5. ELECTRICAL SUBSTATION: The electrical system distributes 277 volts to servers rather than the 208 or 120 volts typical for data centers. Conversion losses from the utility connection to the server are only 2 percent, rather than the typical 11 to 17 percent.

6. SECURITY: Connections from third-party sites are analyzed to guard against malware.

7. THIRD-PARTY PROVIDERS: Data feeds come from external sources, such as Zynga, which operates the popular FarmVille game.

8. FACEBOOK PLATFORM: Servers provide an interface for external developers to use in creating apps that can be integrated into Facebook pages or websites that work with the social network.

9. “LIKE” BUTTON: These servers track how users on Facebook—and a growing number of external sites—are using the “Like” button to signify interest in Web pages.

10. NEWS FEED: This is where posts from other users and notifications from applications are filtered and assembled into a chronological list.

11. WEB SERVERS AND CACHES: Servers (orange) running PHP, an open-source scripting language, assemble data from the other servers in the center and format it into the HTML that makes up a Web page. Frequently accessed content is cached in servers (blue) intermingled with them, so it can be retrieved rapidly without overloading the data center’s internal infrastructure.

12. RACK SERVER: Prineville’s servers use a new design with a high-efficiency power supply. It can accept either 277 volts of AC power or backup DC power from battery cabinets that can each supply multiple servers.
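
To put the conversion-loss figures from item 5 in concrete terms, the short sketch below works out how much power must be drawn from the utility connection to deliver a given load to the servers. The 2 percent and 11 to 17 percent loss figures come from the text; the 1-megawatt server load and the function names are hypothetical, chosen only for illustration.

```python
def delivered_power(utility_watts: float, loss_fraction: float) -> float:
    """Power that reaches the servers after conversion losses."""
    if not 0.0 <= loss_fraction < 1.0:
        raise ValueError("loss_fraction must be in [0, 1)")
    return utility_watts * (1.0 - loss_fraction)


def utility_power_needed(server_watts: float, loss_fraction: float) -> float:
    """Power that must be drawn from the utility to deliver server_watts."""
    if not 0.0 <= loss_fraction < 1.0:
        raise ValueError("loss_fraction must be in [0, 1)")
    return server_watts / (1.0 - loss_fraction)


# Loss fractions quoted in item 5.
LOSSES = {
    "277-volt design": 0.02,
    "typical data center (low end)": 0.11,
    "typical data center (high end)": 0.17,
}

# Hypothetical server load, an assumption made for illustration only.
SERVER_LOAD_WATTS = 1_000_000

if __name__ == "__main__":
    for label, loss in LOSSES.items():
        needed = utility_power_needed(SERVER_LOAD_WATTS, loss)
        wasted = needed - SERVER_LOAD_WATTS
        print(f"{label}: draw {needed / 1000:.0f} kW, lose {wasted / 1000:.0f} kW")
```

Under these assumptions, the gap between the rows is the power saved by the higher-voltage distribution for the same server load.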