Cracking the Brain’s Codes

How does the brain speak to itself?

In What Is Life? (1944), one of the fundamental questions the physicist Erwin Schrödinger posed was whether there was some sort of “hereditary code-script” embedded in chromosomes. A decade later, Crick and Watson answered Schrödinger’s question in the affirmative. Genetic information was stored in the simple arrangement of nucleotides along long strings of DNA.

The question was what all those strings of DNA meant. As most schoolchildren now know, there was a code contained within: adjacent trios of nucleotides, so-called codons, are transcribed from DNA into transient sequences of RNA molecules, which are translated into the long chains of amino acids that we know as proteins. Cracking that code turned out to be a linchpin of virtually everything that followed in molecular biology. As it happens, the code for translating trios of nucleotides into amino acids (for example, the nucleotides AAG code for the amino acid lysine) turned out to be universal; cells in all organisms, large or small—bacteria, giant sequoias, dogs, and people—use the same code with minor variations. Will neuroscience ever discover something of similar beauty and power, a master code that allows us to interpret any pattern of neural activity at will?
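The codon lookup described above can be sketched in a few lines of Python. This is a minimal illustration, not a bioinformatics tool: the table lists only a handful of the 64 codons, the function names are our own, and "transcription" is simplified to rewriting the coding strand with U in place of T.

```python
# Illustrative subset of the standard genetic code (RNA codon -> amino acid).
# Only a few of the 64 codons are listed; the selection is arbitrary.
CODON_TABLE = {
    "AUG": "Met",   # also the usual start codon
    "AAG": "Lys",   # the lysine example from the text
    "AAA": "Lys",
    "GGC": "Gly",
    "UUU": "Phe",
    "UAA": "Stop",
}

def transcribe(dna: str) -> str:
    """Write a DNA coding-strand sequence as RNA (T becomes U)."""
    return dna.upper().replace("T", "U")

def translate(rna: str) -> list[str]:
    """Read the RNA three bases at a time, stopping at a stop codon."""
    protein = []
    for i in range(0, len(rna) - 2, 3):
        amino_acid = CODON_TABLE[rna[i:i + 3]]
        if amino_acid == "Stop":
            break
        protein.append(amino_acid)
    return protein

# Hypothetical 12-base sequence: start codon, lysine, glycine, stop.
print(translate(transcribe("ATGAAGGGCTAA")))
```

The point of the sketch is that a single fixed table suffices for every organism, which is exactly the property the brain's codes appear to lack.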

At stake is virtually every radical advance in neuroscience that we might be able to imagine—brain implants that enhance our memories or treat mental disorders like schizophrenia and depression, for example, and neuroprosthetics that allow paralyzed patients to move their limbs. Because everything that you think, remember, and feel is encoded in your brain in some way, deciphering the activity of the brain will be a giant step toward the future of neuroengineering.

Someday, electronics implanted directly into the brain will enable patients with spinal-cord injury to bypass the affected nerves and control robots with their thoughts (see “The Thought Experiment”). Future biofeedback systems may even be able to anticipate signs of mental disorder and head them off. Where people in the present use keyboards and touch screens, our descendants a hundred years hence may use direct brain-machine interfaces.

But to do that—to build software that can communicate directly with the brain—we need to crack its codes. We must learn how to look at sets of neurons, measure how they are firing, and reverse-engineer their message.

A Chaos of Codes

Already we’re beginning to discover clues about how the brain’s coding works. Perhaps the most fundamental: except in some of the tiniest creatures, such as the roundworm C. elegans, the basic unit of neuronal communication and coding is the spike (or action potential), an electrical impulse of about a tenth of a volt that lasts for a bit less than a millisecond. In the visual system, for example, rays of light entering the retina are promptly translated into spikes sent out on the optic nerve, the bundle of about one million output wires, called axons, that run from the eye to the rest of the brain. Literally everything that you see is based on these spikes, each retinal neuron firing at a different rate, depending on the nature of the stimulus, to yield several megabytes of visual information per second. The brain as a whole, throughout our waking lives, is a veritable symphony of neural spikes—perhaps one trillion per second. To a large degree, to decipher the brain is to infer the meaning of its spikes.

But the challenge is that spikes mean different things in different contexts. It is already clear that neuroscientists are unlikely to be as lucky as molecular biologists. Whereas the code converting nucleotides to amino acids is nearly universal, used in essentially the same way throughout the body and throughout the natural world, the spike-to-information code is likely to be a hodgepodge: not just one code but many, differing not only to some degree between different species but even between different parts of the brain. The brain has many functions, from controlling our muscles and voice to interpreting the sights, sounds, and smells that surround us, and each kind of problem necessitates its own kinds of codes.

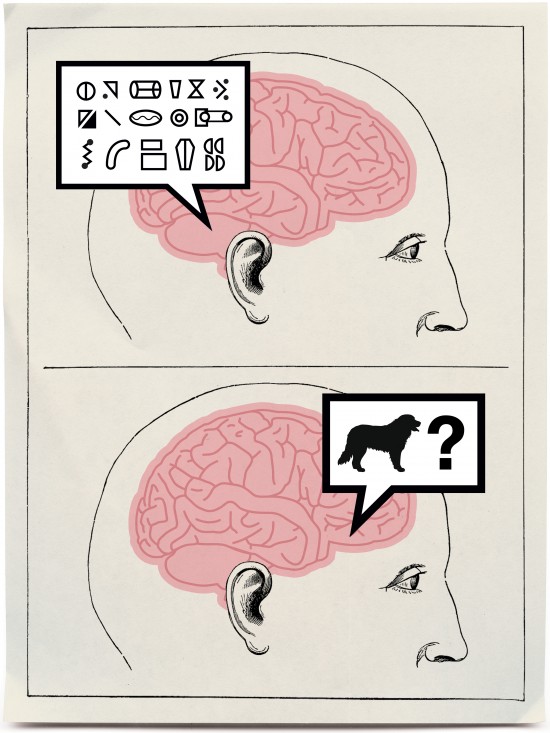

A comparison with computer codes makes clear why this is to be expected. Consider the near-ubiquitous ASCII code, which represents 128 characters, including letters, digits, and punctuation marks, used in communications such as plain-text e-mail. Almost every modern computer uses ASCII, which encodes the capital letter A as “100 0001,” B as “100 0010,” C as “100 0011,” and so forth. When it comes to images, however, that code is useless, and different techniques must be used. Uncompressed bitmapped images, for example, assign strings of bytes to represent the intensities of the colors red, green, and blue for each pixel in the array making up an image. Different codes represent vector graphics, movies, or sound files.
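The ASCII bit patterns quoted above are easy to reproduce. The short Python sketch below prints the seven-bit pattern for a character and, for contrast, shows a pixel stored as three color bytes; the helper name `ascii_bits` and the sample pixel values are our own choices for illustration.

```python
def ascii_bits(ch: str) -> str:
    """Return the 7-bit ASCII pattern for a character, grouped as 3 + 4 bits."""
    code = ord(ch)
    if code > 127:
        raise ValueError("not an ASCII character")
    bits = format(code, "07b")
    return bits[:3] + " " + bits[3:]

for letter in "ABC":
    print(letter, ascii_bits(letter))

# An uncompressed RGB pixel follows a different scheme: one byte per channel.
pixel = bytes([255, 128, 0])  # hypothetical orange pixel: red, green, blue
print(list(pixel))
```

The same bits mean entirely different things under the two schemes, so knowing which code is in use matters as much as reading the bits themselves.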

Evidence points in the same direction for the brain. Rather than a single universal code spelling out what patterns of spikes mean, there appear to be many, depending on what kind of information is to be encoded. Sounds, for example, are inherently one-dimensional and vary rapidly across time, while the images that stream from the retina are two-dimensional and tend to change at a more deliberate pace. Olfaction, which depends on concentrations of hundreds of airborne odorants, relies on another system altogether. That said, there are some general principles. What matters most is not precisely when a particular neuron spikes but how often it does; the rate of firing is the main currency.

Consider, for example, neurons in the visual cortex, the area that receives impulses from the optic nerve via a relay in the thalamus. These neurons represent the world in terms of the basic elements making up any visual scene—lines, points, edges, and so on. A given neuron in the visual cortex might be stimulated most vigorously by vertical lines. As the line is rotated, the rate at which that neuron fires varies: four spikes in a tenth of a second if the line is vertical, but perhaps just once in the same interval if it is rotated 45° counterclockwise. Though the neuron responds most to vertical lines, it is never mute. No single spike signals whether it is responding to a vertical line or something else. Only in the aggregate—in the neuron’s rate of firing over time—can the meaning of its activity be discerned.

This strategy, known as rate coding, is used in different ways in different brain systems, but it is common throughout the brain. Different subpopulations of neurons encode particular aspects of the world in a similar fashion—using firing rates to represent variations in brightness, speed, distance, orientation, color, pitch, and even haptic information like the position of a pinprick on the palm of your hand. Individual neurons fire most rapidly when they detect some preferred stimulus, less rapidly when they don’t.
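The orientation example above (four spikes versus one in a tenth of a second) comes down to counting spikes in a time window. A minimal sketch, assuming spike times are given in seconds; the spike times themselves are invented for illustration.

```python
def firing_rate(spike_times, window_start, window_end):
    """Mean firing rate in spikes per second within [window_start, window_end)."""
    count = sum(window_start <= t < window_end for t in spike_times)
    return count / (window_end - window_start)

# Hypothetical spike times (seconds) for the two stimuli in the text.
vertical_line = [0.012, 0.031, 0.058, 0.090]  # four spikes in 0.1 s
rotated_line = [0.047]                        # one spike in 0.1 s

print(firing_rate(vertical_line, 0.0, 0.1))   # roughly 40 spikes per second
print(firing_rate(rotated_line, 0.0, 0.1))    # roughly 10 spikes per second
```

Note that no individual spike time carries the message here; only the count over the window does.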

To make things more complicated, spikes emanating from different kinds of cells encode different kinds of information. The retina is an intricately layered piece of nervous-system tissue that lines the back of each eye. Its job is to transduce the shower of incoming photons into outgoing bursts of electrical spikes. Neuroanatomists have identified at least 60 different types of retinal neurons, each with its own specialized shape and function. The axons of 20 different retinal cell types make up the optic nerve, the eye’s sole output. Some of these cells signal motion in several cardinal directions; others specialize in signaling overall image brightness or local contrast; still others carry information pertaining to color. Each of these populations streams its own data, in parallel, to different processing centers upstream from the eye. To reconstruct the nature of the information that the retina encodes, scientists must track not only the rate of every neuron’s spiking but also the identity of each cell type. Four spikes coming from one type of cell may encode a small colored blob, whereas four spikes from a different cell type may encode a moving gray pattern. The number of spikes is meaningless unless we know what particular kind of cell they are coming from.

And what is true of the retina seems to hold throughout the brain. All in all, there may be up to a thousand neuronal cell types in the human brain, each presumably with its own unique role.

Wisdom of Crowds

Typically, important codes in the brain involve the action of many neurons, not just one. The sight of a face, for instance, triggers activity in thousands of neurons in higher-order sectors of the visual cortex. Every cell responds somewhat differently, reacting to a different detail—the exact shape of the face, the hue of its skin, the direction in which the eyes are focused, and so on. The larger meaning inheres in the cells’ collective response.

A major breakthrough in understanding this phenomenon, known as population coding, came in 1986, when Apostolos Georgopoulos, Andrew Schwartz, and Ronald Kettner at the Johns Hopkins University School of Medicine learned how a set of neurons in the motor cortex of monkeys encoded the direction in which a monkey moves a limb. No one neuron fully determined where the limb would move, but information aggregated across a population of neurons did. By calculating a kind of weighted average of all the neurons that fired, Georgopoulos and his colleagues found, they could reliably and precisely infer the intended motion of the monkey’s arm.
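The weighted average described above can be illustrated with a simplified "population vector" calculation. This is a sketch under stated assumptions, not the published analysis: the eight neurons, their evenly spaced preferred directions, the cosine-shaped tuning, and the 20-spikes-per-second baseline are all invented for the example.

```python
import math

def population_vector(preferred_deg, rates, baseline):
    """Estimate movement direction (degrees) from a population of neurons.

    Each neuron votes for its preferred direction, weighted by how far its
    firing rate sits above or below the baseline rate.
    """
    x = sum((r - baseline) * math.cos(math.radians(p))
            for p, r in zip(preferred_deg, rates))
    y = sum((r - baseline) * math.sin(math.radians(p))
            for p, r in zip(preferred_deg, rates))
    return math.degrees(math.atan2(y, x)) % 360

# Hypothetical population: 8 neurons, evenly spaced preferred directions,
# cosine tuning around a 20 spikes/s baseline.
preferred = [0, 45, 90, 135, 180, 225, 270, 315]
true_direction = 60.0
rates = [20 + 15 * math.cos(math.radians(true_direction - p)) for p in preferred]

estimate = population_vector(preferred, rates, baseline=20)
print(round(estimate, 1))
```

No neuron in this toy population prefers 60 degrees, yet the pooled estimate recovers it, which is the sense in which the information lives in the population rather than in any single cell.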

One of the first illustrations of what neurotechnology might someday achieve builds directly on this discovery. Brown University neuroscientist John Donoghue has leveraged the idea of population coding to build neural “decoders”—incorporating both software and electrodes—that interpret neural firing in real time. Donoghue’s team implanted a brushlike array of microelectrodes directly into the motor cortex of a paralyzed patient to record neural activity as the patient imagined various types of motor activities. With the help of algorithms that interpreted these signals, the patient could use the results to control a robotic arm. The “mind” control of the robot arm is still slow and clumsy, akin to steering an out-of-alignment moving van. But the work is a powerful hint of what is to come as our capacity to decode the brain’s activity improves.

Among the most important codes in any animal’s brain are the ones it uses to pinpoint its location in space. How does our own internal GPS work? How do patterns of neural activity encode where we are? A first important hint came in the early 1970s with the discovery by John O’Keefe at University College London of what became known as place cells in the hippocampus of rats. Such cells fire every time the animal walks or runs through a particular part of a familiar environment. In the lab, one place cell might fire most often when the animal is near a maze’s branch point; another might respond most actively when the animal is close to the entry point. The husband-and-wife team of Edvard and May-Britt Moser discovered a second type of spatial coding based on what are known as grid cells. These neurons fire most actively when an animal is at the vertices of an imagined geometric grid representing its environment. With sets of such cells, the animal is able to triangulate its position, even in the dark. (There appear to be at least four different sets of these grid cells at different resolutions, allowing a fine degree of spatial representation.)

Other codes allow animals to control actions that take place over time. An example is the circuitry responsible for executing the motor sequences underlying singing in songbirds. Adult male finches sing to their female partners, each stereotyped song lasting but a few seconds. As Michale Fee and his collaborators at MIT discovered, neurons of one type within a particular structure are completely quiet until the bird begins to sing. Whenever the bird reaches a particular point in its song, these neurons suddenly erupt in a single burst of three to five tightly clustered spikes, only to fall silent again. Different neurons erupt at different times. It appears that individual clusters of neurons code for temporal order, each representing a specific moment in the bird’s song.

Grandma Coding

Unlike a typewriter, in which a single key uniquely specifies each letter, the ASCII code uses multiple bits to determine a letter: it is an example of what computer scientists call a distributed code. In a similar way, theoreticians have often imagined that complex concepts might be bundles of individual “features”; the concept “Bernese mountain dog” might be represented by neurons that fire in response to notions such as “dog,” “snow-loving,” “friendly,” “big,” “brown and black,” and so on, while many other neurons, such as those that respond to vehicles or cats, fail to fire. Collectively, this large population of neurons might represent a concept.
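The contrast between a typewriter-style code and a distributed one can be made concrete. In the Python sketch below, each concept is either given one dedicated unit or described by which feature units are active; the feature list, the extra concepts, and the function names are hypothetical and chosen only for illustration.

```python
FEATURES = ["dog", "snow-loving", "friendly", "big", "brown and black",
            "vehicle", "cat"]

# Hypothetical concepts, each defined by the feature units it activates.
CONCEPTS = {
    "Bernese mountain dog": {"dog", "snow-loving", "friendly", "big",
                             "brown and black"},
    "chihuahua": {"dog", "friendly"},
    "pickup truck": {"vehicle", "big"},
}

def distributed_code(concept):
    """One unit per feature; several units are active for a single concept."""
    active = CONCEPTS[concept]
    return [1 if f in active else 0 for f in FEATURES]

def dedicated_code(concept):
    """One unit per concept, like a single typewriter key per letter."""
    return [1 if name == concept else 0 for name in CONCEPTS]

def overlap(a, b):
    """Number of active units that two codes share."""
    return sum(x & y for x, y in zip(a, b))

bernese = distributed_code("Bernese mountain dog")
print(bernese)
print(overlap(bernese, distributed_code("chihuahua")))
print(overlap(dedicated_code("Bernese mountain dog"),
              dedicated_code("chihuahua")))
```

In the distributed scheme, related concepts share active units, so similarity falls out of the code itself; with dedicated units, every pair of distinct concepts looks equally unrelated.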

An alternative notion, called sparse coding, has received much less attention. Indeed, neuroscientists once scorned the idea as “grandmother-cell coding.” The derisive term implied a hypothetical neuron that would fire only when its bearer saw or thought of his or her grandmother—surely, or so it seemed, a preposterous concept.

But recently, one of us (Koch) helped discover evidence for a variation on this theme. While there is no reason to think that a single neuron in your brain represents your grandmother, we now know that individual neurons (or at least comparatively small groups of them) can represent certain concepts with great specificity. Recordings from microelectrodes implanted deep inside the brains of epileptic patients revealed single neurons that responded to extremely specific stimuli, such as celebrities or familiar faces. One such cell, for instance, responded to different pictures of the actress Jennifer Aniston. Others responded to pictures of Luke Skywalker of Star Wars fame, or to his name spelled out. A familiar name may be represented by as few as a hundred and as many as a million neurons in the human hippocampus and neighboring regions.

Such findings suggest that the brain can indeed wire up small groups of neurons to encode important things it encounters over and over, a kind of neuronal shorthand that may be advantageous for quickly associating and integrating new facts with preëxisting knowledge.

Terra Incognita

If neuroscience has made real progress in figuring out how a given organism encodes what it experiences in a given moment, it has further to go toward understanding how organisms encode their long-term knowledge. We obviously wouldn’t survive for long in this world if we couldn’t learn new skills, like the orchestrated sequence of actions and decisions that go into driving a car. Yet the precise method by which we do this remains mysterious. Spikes are necessary but not sufficient for translating intention into action. Long-term memory—like the knowledge that we develop as we acquire a skill—is encoded differently, not by volleys of constantly circulating spikes but, rather, by literal rewiring of our neural networks.

That rewiring is accomplished at least in part by resculpting the synapses that connect neurons. We know that many different molecular processes are involved, but we still know little about which synapses are modified and when, and almost nothing about how to work backward from a neural connectivity diagram to the particular memories encoded.

Another mystery concerns how the brain represents phrases and sentences. Even if there is a small set of neurons defining a concept like your grandmother, it is unlikely that your brain has allocated specific sets of neurons to complex concepts that are less common but still immediately comprehensible, like “Barack Obama’s maternal grandmother.” It is similarly unlikely that the brain dedicates particular neurons full time to representing each new sentence we hear or produce. Instead, each time we interpret or produce a novel sentence, the brain probably integrates multiple neural populations, combining codes for basic elements (like individual words and concepts) into a system for representing complex, combinatorial wholes. As yet, we have no clue how this is accomplished.

One reason such questions about the brain’s schemes for encoding information have proved so difficult to crack is that the human brain is so immensely complex, encompassing 86 billion neurons linked by something on the order of a quadrillion synaptic connections. Another is that our observational techniques remain crude. The most popular imaging tools for peering into the human brain do not have the spatial resolution to catch individual neurons in the act of firing. To study neural coding systems that are unique to humans, such as those used in language, we probably need tools that have not yet been invented, or at least substantially better ways of studying highly interspersed populations of individual neurons in the living brain.

It is also worth noting that what neuroengineers try to do is a bit like eavesdropping—tapping into the brain’s own internal communications to try to figure out what they mean. Some of that eavesdropping may mislead us. Every neural code we can crack will tell us something about how the brain operates, but not every code we crack is something the brain itself makes direct use of. Some of them may be “epiphenomena”—accidental tics that, even if they prove useful for engineering and clinical applications, could be diversions on the road to a full understanding of the brain.

Nonetheless, there is reason to be optimistic that we are moving toward that understanding. Optogenetics now allows researchers to switch genetically identified classes of neurons on and off at will with colored beams of light. Any population of neurons that has a known, unique molecular zip code can be tagged with a fluorescent marker and then be either made to spike with millisecond precision or prevented from spiking. This allows neuroscientists to move from observing neuronal activity to delicately, transiently, and reversibly interfering with it. Optogenetics, now used primarily in flies and mice, will greatly speed up the search for neural codes. Instead of merely correlating spiking patterns with a behavior, experimentalists will be able to write in patterns of information and directly study the effects on the brain circuitry and behavior of live animals.

Deciphering neural codes is only part of the battle. Cracking the brain’s many codes won’t tell us everything we want to know, any more than understanding ASCII codes can, by itself, tell us how a word processor works. Still, it is a vital prerequisite for building technologies that repair and enhance the brain.

Take, for example, new efforts to use optogenetics to remedy a form of blindness caused by degenerative disorders, such as retinitis pigmentosa, that attack the light-sensing cells of the eye. One promising strategy uses a virus injected into the eyeballs to genetically modify retinal ganglion cells so that they become responsive to light. A camera mounted on glasses would pulse beams of light into the retina and trigger electrical activity in the genetically modified cells, which would directly stimulate the next set of neurons in the signal path—restoring sight. But in order to make this work, scientists will have to learn the language of those neurons. As we learn to communicate with the brain in its own language, whole new worlds of possibilities may soon emerge.

Christof Koch is chief scientific officer of the Allen Institute for Brain Science in Seattle. Gary Marcus, a professor of psychology at New York University and a frequent blogger for the New Yorker, is coeditor of the forthcoming book The Future of the Brain.