A new set of images that fool AI could help make it more hacker-proof

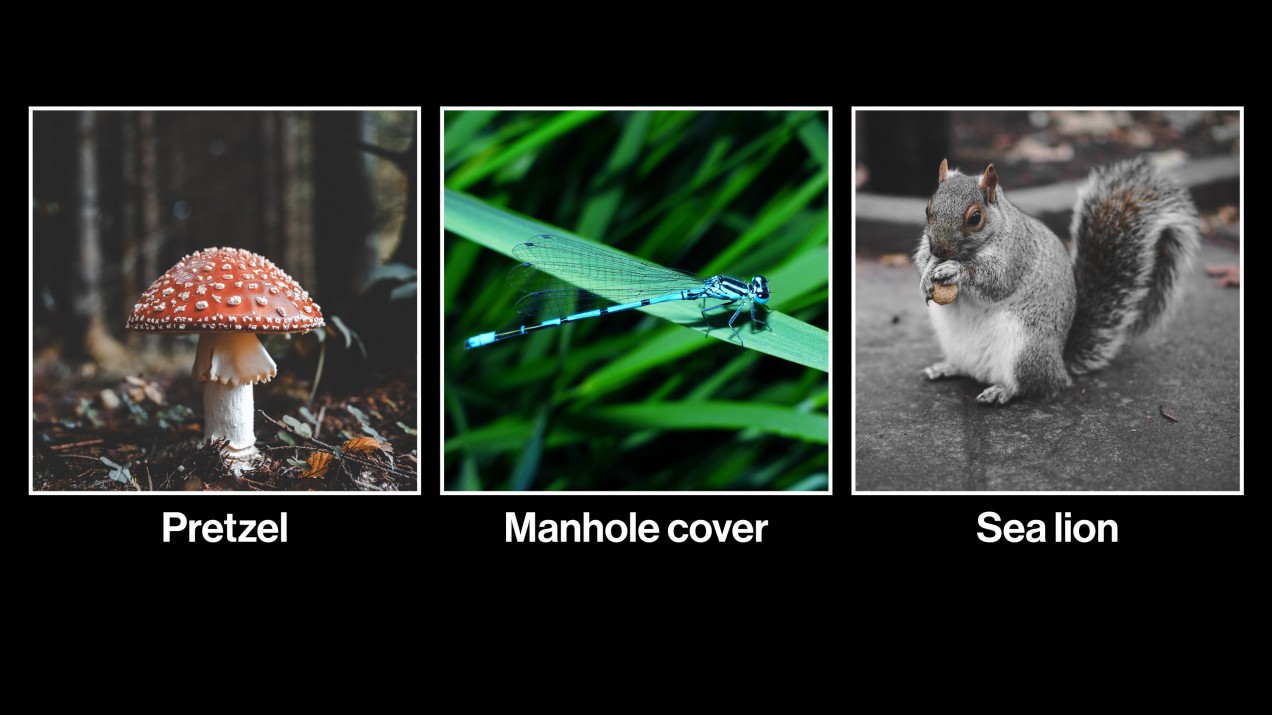

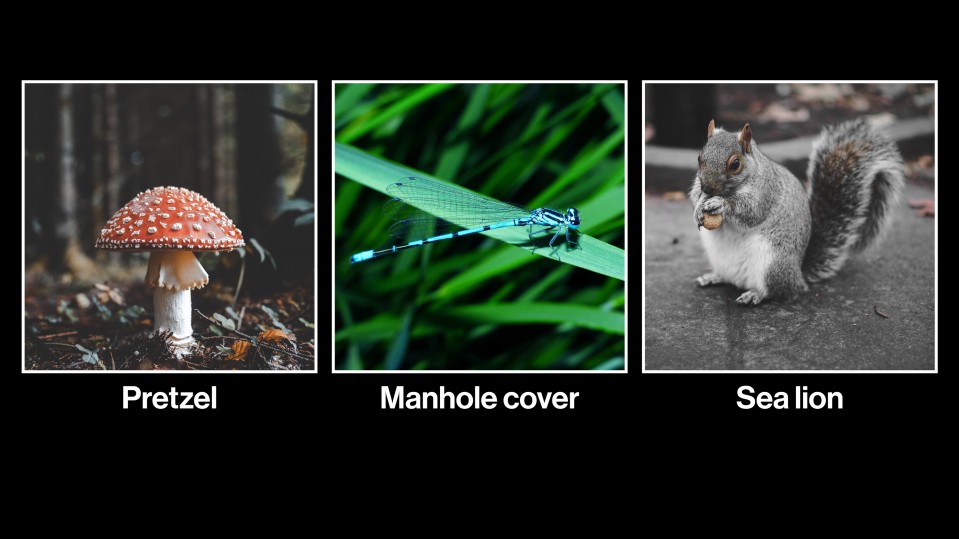

Squirrels mislabeled as sea lions and dragonflies confused with manhole covers are challenging algorithms to be more resilient to attacks.

Artificial intelligence is great at identifying objects in images, but it’s still pretty easy to mess it up. Add a few choice strokes or layer in some static noise invisible to the human eye, and you can throw off an image recognition system, sometimes to deadly effect. Adding stickers to a stop sign can make a self-driving car believe the sign is posting a 45-mile-per-hour speed limit, for example, while adding them to a road can make a Tesla swerve into the oncoming traffic lane. (On the bright side, the same techniques can also shield you from the surveillance state. You win some, you lose some.)
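To make the "static noise" idea concrete, here is a minimal Python sketch, assuming a toy four-pixel linear classifier. The weights, pixel values, class names, and the `epsilon` budget are all invented for illustration. The sign-based nudge is in the spirit of the fast gradient sign method; it is not a reproduction of the stop-sign or Tesla attacks mentioned above.

```python
# Toy linear "image classifier": one row of weights per class, prediction = highest score.
# Every number below is hypothetical and chosen only to illustrate the idea.

def predict(weights, pixels):
    """Return the index of the class with the highest linear score."""
    scores = [sum(w * p for w, p in zip(row, pixels)) for row in weights]
    return max(range(len(scores)), key=scores.__getitem__)

def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def perturb(weights, pixels, true_class, target_class, epsilon):
    """Shift each pixel by at most epsilon in the direction that favors target_class."""
    direction = [
        sign(w_target - w_true)
        for w_target, w_true in zip(weights[target_class], weights[true_class])
    ]
    return [p + epsilon * d for p, d in zip(pixels, direction)]

WEIGHTS = [
    [1.0, 0.0, 0.5, 0.10],  # class 0: "stop sign" (hypothetical)
    [0.8, 0.1, 0.4, 0.28],  # class 1: "speed limit" (hypothetical)
]
IMAGE = [0.5, 0.5, 0.5, 0.5]  # pixel intensities in the range 0..1
EPSILON = 0.02                # maximum change allowed per pixel

adversarial = perturb(WEIGHTS, IMAGE, true_class=0, target_class=1, epsilon=EPSILON)

original_label = predict(WEIGHTS, IMAGE)
adversarial_label = predict(WEIGHTS, adversarial)
print(original_label, adversarial_label)
```

With these made-up numbers, changing each pixel by just 0.02 is enough to flip the predicted class. Real images have many thousands of pixels, so an attacker can spread the change much more thinly, which is how the noise can stay invisible to the human eye.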

All of these are known as adversarial examples—and researchers are now scrambling to develop ways to protect AI systems from them. But in a paper last year, a group of researchers at Google Brain and Princeton, including one of the earliest researchers on this topic, Ian Goodfellow, argued that the emerging scholarship was too theoretical and missed the point.

While the bulk of the research focused on protecting systems from specially designed perturbations, a hacker, they said, would be more likely to choose a blunter tool: swapping in a completely different photo rather than layering a noise pattern onto an existing one. This, too, could cause the system to misbehave.

The critique prompted Dan Hendrycks, a PhD student at the University of California, Berkeley, to compile a new image data set. He calls the images it contains “natural adversarial examples”—without any special tweaks, they fool a system anyway.

They include things like a squirrel that common systems mislabel as a sea lion, or a dragonfly that they misidentify as a manhole cover. “These examples appear much harder to defend against,” he says. Synthetic adversarial examples are most effective when the attacker who crafts them knows all of the AI system’s defenses. In contrast, natural examples can work pretty well even when those defenses change, he says.

Hendrycks released an early version of the data set, with around 6,000 images, last week at the International Conference on Machine Learning. He plans to release a final version with close to 8,000 images in a couple of weeks. He intends for the research community to use the data set as a benchmark.

In other words, rather than train image recognition systems directly on the images, researchers should reserve them for testing only. “If people were to just train on this data set, that’s just memorizing these examples,” he says. “That would be solving the data set but not the task of being robust to new examples.”
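The difference between "solving the data set" and being robust to new examples can be sketched in a few lines of Python. This is a deliberately extreme illustration, not Hendrycks's evaluation code: the image IDs, labels, and the lookup-table "model" are all hypothetical stand-ins.

```python
# A "model" that trains by memorizing the benchmark verbatim.
# Image IDs and labels are hypothetical placeholders, not real data set entries.

def train_by_memorizing(examples):
    """Store every (image_id, label) pair; a stand-in for extreme overfitting."""
    table = dict(examples)
    return lambda image_id: table.get(image_id, "unknown")

def accuracy(model, examples):
    """Fraction of examples for which the model returns the correct label."""
    correct = sum(1 for image_id, label in examples if model(image_id) == label)
    return correct / len(examples)

BENCHMARK = [
    ("img_001", "squirrel"),
    ("img_002", "dragonfly"),
    ("img_003", "squirrel"),
]
NEW_EXAMPLES = [
    ("img_101", "squirrel"),
    ("img_102", "dragonfly"),
]

memorizer = train_by_memorizing(BENCHMARK)
seen_score = accuracy(memorizer, BENCHMARK)        # scored on what it memorized
unseen_score = accuracy(memorizer, NEW_EXAMPLES)   # scored on images it never saw
print(seen_score, unseen_score)
```

The memorizer gets every benchmark image right and every new image wrong. A benchmark score is only informative if the benchmark images were kept out of training.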

Cracking the logic behind the sometimes baffling errors the examples cause could lead to more resilient systems. “How is this confusing a dragonfly for guacamole?” Hendrycks jokes. “It’s not too clear why the mistake is being made at all.”