
A robot puppet can learn to walk if it’s hooked up to human legs

Robots might be able to navigate unfamiliar environments if they copy what we do.

Humans don’t need to have seen a set of stairs before to know what it is, or how to climb it. But for a robot, stairs can present an insurmountable problem.

Getting robots to mimic how we manage to move around so effortlessly is one potential solution. That’s the premise of a study by researchers from the University of Illinois and MIT published in Science Robotics today.

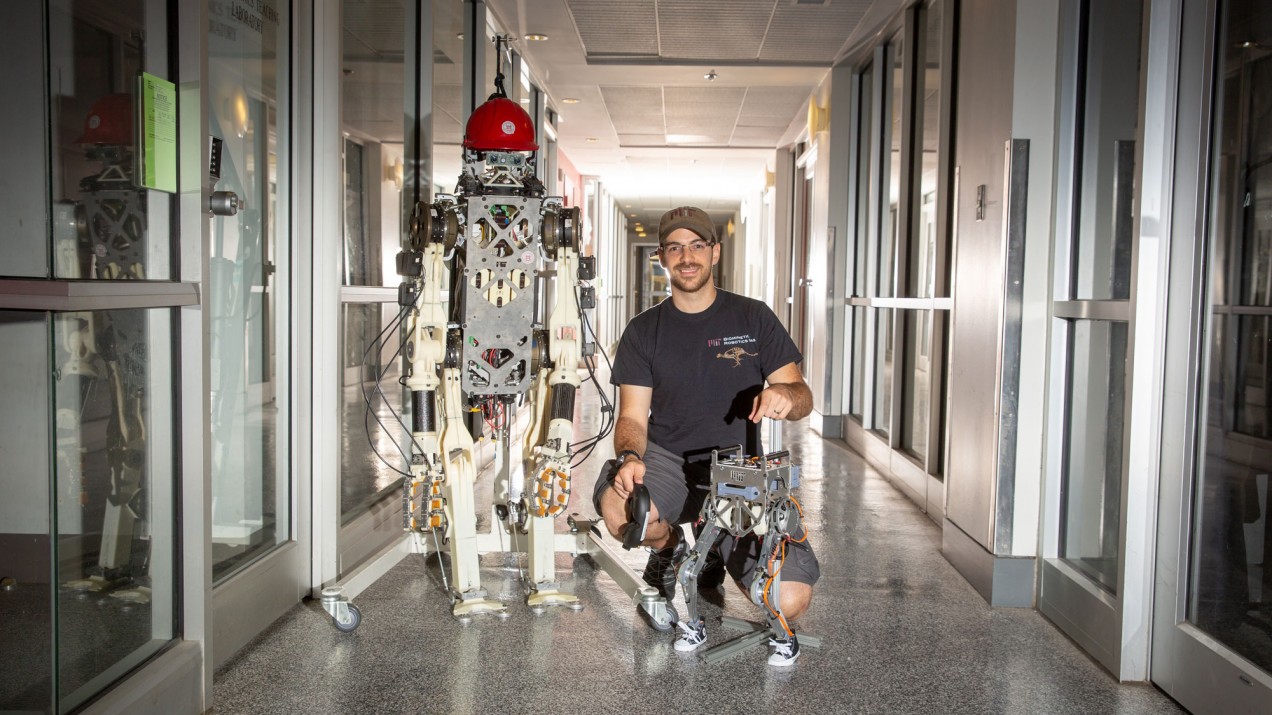

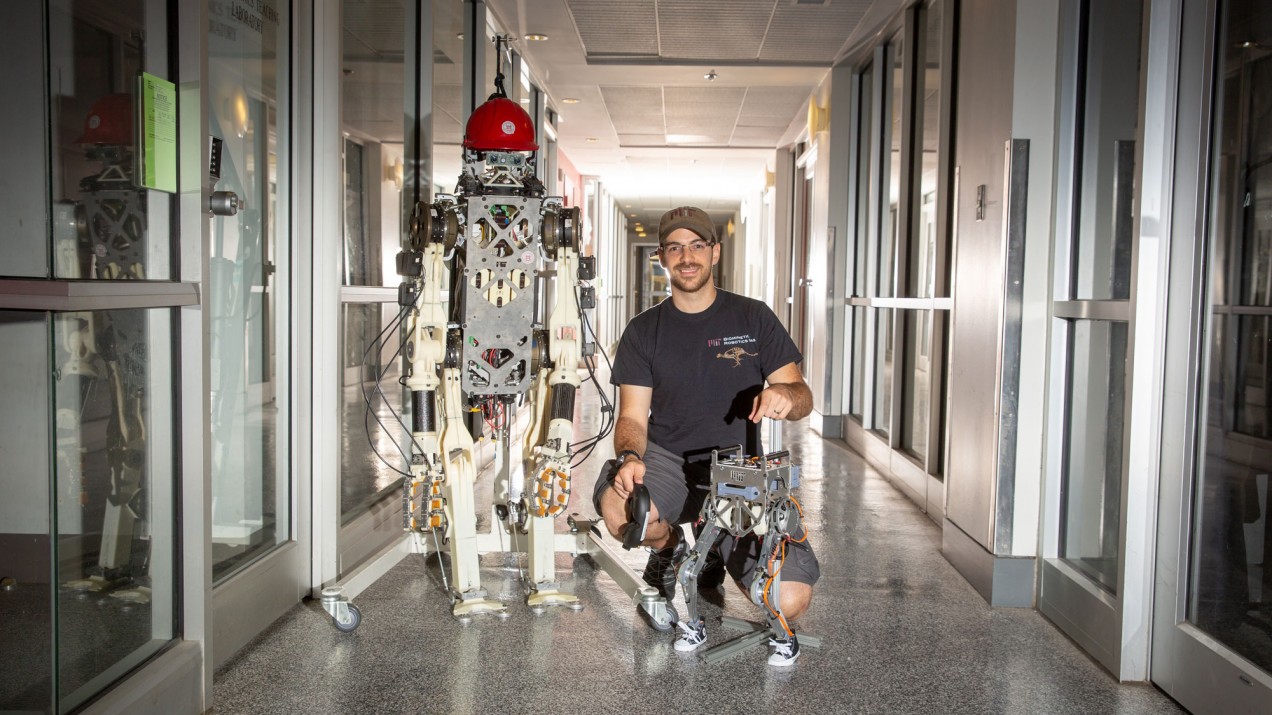

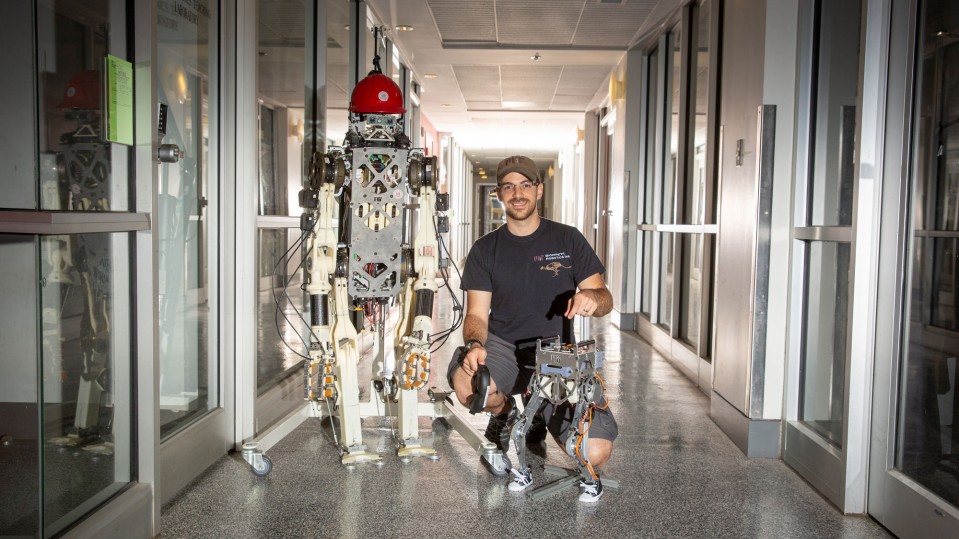

They have created a human-machine interface that maps an operator’s movements onto a robot. It tracks the operator’s leg movements (jumping, walking, or stepping) as their feet move on a plate equipped with motion sensors, and it tracks the movements of the operator’s torso using a vest wired up with sensors. The data captured from the torso and legs is then mapped onto a two-legged robot (specifically, a smaller version of the Hermes robot developed by MIT).
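To make the idea of "mapping" concrete, here is a minimal Python sketch of how operator motion might be scaled down for a smaller robot. It is an illustration only, not the researchers' method: the `OperatorSample` and `RobotTarget` types, the `map_to_robot` function, and the simple height-ratio scaling are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class OperatorSample:
    """One hypothetical sensor reading from the operator, in metres."""
    torso_xyz: Tuple[float, float, float]  # from the sensor vest
    left_foot_xy: Tuple[float, float]      # from the sensor plate
    right_foot_xy: Tuple[float, float]     # from the sensor plate


@dataclass
class RobotTarget:
    """The scaled-down pose the robot would be asked to track."""
    torso_xyz: Tuple[float, float, float]
    left_foot_xy: Tuple[float, float]
    right_foot_xy: Tuple[float, float]


def map_to_robot(sample: OperatorSample,
                 operator_height_m: float,
                 robot_height_m: float) -> RobotTarget:
    """Scale every operator position by the robot-to-human height ratio.

    Assumption: uniform geometric scaling. A real system would also have
    to account for dynamics such as balance and timing.
    """
    if operator_height_m <= 0 or robot_height_m <= 0:
        raise ValueError("heights must be positive")
    scale = robot_height_m / operator_height_m
    return RobotTarget(
        torso_xyz=tuple(scale * v for v in sample.torso_xyz),
        left_foot_xy=tuple(scale * v for v in sample.left_foot_xy),
        right_foot_xy=tuple(scale * v for v in sample.right_foot_xy),
    )
```

With a 1.8 m operator and a 0.9 m robot, for instance, a 20 cm sideways step by the operator would become a 10 cm step target for the robot.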

The system works both ways: it also allows the operator to “feel” what the robot is feeling. If it bumps into a wall, or gets nudged, that sensation is transmitted back to the person at the other end via tactile feedback. This lets the person adjust accordingly, applying more or less pressure as required. This feedback includes safety measures that automatically cut out power if the robot experiences dangerous levels of force, according to João Ramos, an assistant professor at the University of Illinois and coauthor of the paper.
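The two-way loop with a safety cut-out can be sketched in a few lines of Python. This is a hypothetical illustration, not the team's implementation: the `feedback_step` function, the feedback gain, and the 200-newton threshold are invented for the example, and the article does not say what force levels count as dangerous.

```python
from typing import Tuple


def feedback_step(robot_force_n: float,
                  gain: float = 0.5,
                  cutoff_n: float = 200.0) -> Tuple[float, bool]:
    """Return (force to apply to the operator, whether power stays on).

    robot_force_n: force sensed at the robot, in newtons (sign = direction).
    gain:          assumed scaling between robot force and operator feedback.
    cutoff_n:      assumed magnitude at which power is cut for safety.
    """
    if abs(robot_force_n) >= cutoff_n:
        # Dangerous force: cut power and send no feedback to the operator.
        return 0.0, False
    # Normal operation: pass a scaled copy of the force back to the operator.
    return gain * robot_force_n, True
```

Under these assumed values, a 40-newton nudge on the robot would be felt by the operator as a 20-newton push, while a 250-newton impact would trip the cut-out instead.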

The set-up is pretty basic at the moment. It requires a lot of wiring, has some communication delays, and captures only fairly simple movements. It is also limited to specific tasks, rather than being a generalized system for all movements. However, it’s a step toward more mobile, and more useful, robots.

“Getting robots to move autonomously is the biggest challenge in robotics. This neatly sidesteps that, using the power of the human mind to take in sensory information about the world, process that, then relate to a control system for tasks like balancing or stepping,” says Mike Mistry, who studies robotics at the University of Edinburgh and was not involved in this study.

Being virtually hooked up to a human could help robots respond to disasters or other situations that would put human responders’ lives at risk. The researchers say that a system like this could be used to help in robotic clean-up operations such as the one after the Fukushima Daiichi nuclear power plant disaster in Japan in 2011, where humans could have guided robots around the site more accurately, from a safe distance. And while there’s currently no machine learning involved in the process, Ramos believes the data captured from the system could be used to help train autonomous robots.

“In 50 years we will have fully autonomous robots. But human control offers a bunch of potentials we haven’t explored yet, so in the meantime, it makes sense to combine robots and humans to make the best use of both,” he said.