
An ever-changing room of Ikea furniture could help AI navigate the world

The Allen Institute wants to crowdsource navigation algorithms by letting researchers turn their robots loose in its physical and virtual apartments.

In a building across from its main office in Seattle, the Allen Institute for Artificial Intelligence (AI2) has enough Ikea furniture to configure 14 different apartments. The lab isn’t going into interior design—not exactly. The resources are meant to train smarter algorithms for controlling robots.

Household robots like the Roomba function well only because their tasks are relatively simple. Meandering around, doubling back, and returning to the same spots over and over don’t really matter when the objective is to relentlessly clean the same floor.

But anything that requires more efficient or complex navigation still trips up many state-of-the-art robots. The research needed to change that is also expensive, which has confined most cutting-edge progress to well-funded commercial labs.

Now AI2 wants to kill two birds with one stone. On Tuesday, it announced a new challenge called RoboTHOR (THOR for The House Of inteRactions—yes, really). It will double as a way to crowdsource better navigation algorithms and lower the financial barriers for researchers who may not have robotics resources of their own.

The ultimate goal is to more rapidly advance AI by getting more research groups involved. Different communities should bring different perspectives and use cases that will expand the repertoire of robot capabilities, driving the field closer to more generalizable intelligence.

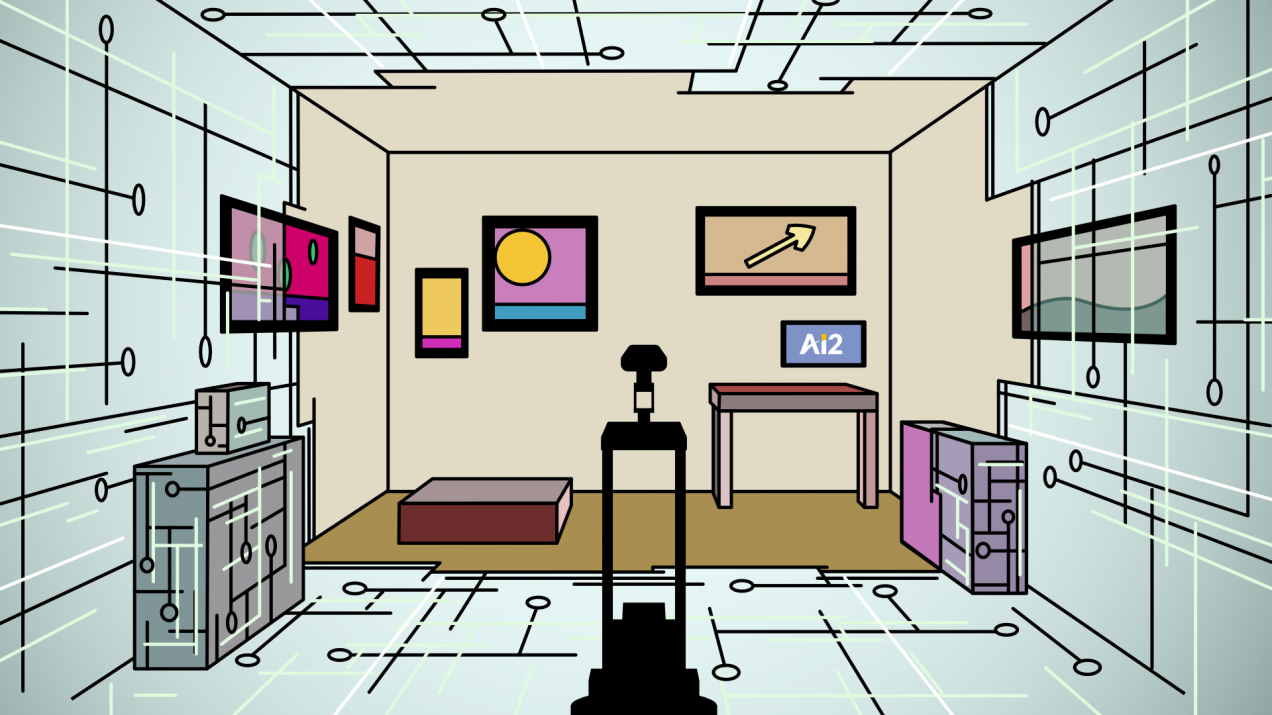

The lab has designed an easily reconfigurable room, the size of a cramped studio, to serve as the staging ground for all 14 apartment variations. It has also built identical virtual replicas of those apartments in Unity, a popular video-game engine, along with 75 additional virtual-only configurations, and has open-sourced all of them online. Together, these 89 configurations offer realistic simulation environments in which teams around the world can train and test their navigation algorithms. The environments also come preloaded with models of AI2's robots and reproduce real-world physics and lighting, such as gravity and light reflections, as closely as possible.

The challenge specifically asks teams to develop algorithms that can get a robot from a random starting location within a room to a target object in that room, given only the object's name. This is harder than simple navigation because the robot must also understand the command and recognize the object in its visual field.
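The task described above is commonly called object-goal navigation. As a rough illustration only, here is a minimal Python sketch of a single episode under strong simplifying assumptions: the room is a hypothetical text grid rather than one of AI2's Unity scenes, "recognizing" the target is a lookup by label instead of computer vision, and an episode counts as a success if the agent stops on a floor cell next to the object. The names `ROOM`, `locate`, `plan_to`, and `run_episode` are invented for this example and are not part of RoboTHOR or the AI2-THOR API.

```python
import random
from collections import deque

# Hypothetical toy room invented for illustration (not an AI2 scene):
# '.' is open floor, '#' is a wall or furniture, and letters are objects.
ROOM = [
    "#########",
    "#...#..T#",
    "#.#.#.#.#",
    "#.#...#.#",
    "#S#.#...#",
    "#...#.#A#",
    "#########",
]
OBJECTS = {"T": "television", "S": "sofa", "A": "apple"}


def locate(name):
    """Stand-in for visual recognition: find the grid cell holding `name`."""
    for r, row in enumerate(ROOM):
        for c, ch in enumerate(row):
            if OBJECTS.get(ch) == name:
                return (r, c)
    raise ValueError(f"no object named {name!r} in this room")


def free_neighbors(cell):
    """Yield adjacent floor cells; walls and objects block movement."""
    r, c = cell
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if ROOM[nr][nc] == ".":
            yield (nr, nc)


def adjacent(a, b):
    """True if two cells are one grid step apart."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1]) == 1


def plan_to(start, target):
    """Hypothetical policy: breadth-first search to the nearest cell beside `target`."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if adjacent(cell, target):
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        for nxt in free_neighbors(cell):
            if nxt not in parents:
                parents[nxt] = cell
                queue.append(nxt)
    return None  # target unreachable from start


def run_episode(object_name, seed=0):
    """One episode: random start, a named goal, success if the agent stops beside it."""
    rng = random.Random(seed)
    floor = [(r, c) for r, row in enumerate(ROOM)
             for c, ch in enumerate(row) if ch == "."]
    start = rng.choice(floor)
    target = locate(object_name)
    path = plan_to(start, target)
    if path is None:
        return False, 0
    # Evaluator, kept separate from the policy: judge only where the agent stopped.
    return adjacent(path[-1], target), len(path) - 1


if __name__ == "__main__":
    for name in ("television", "sofa", "apple"):
        print(name, run_episode(name, seed=42))
```

The breadth-first planner here is an oracle with a full map, which a real entry would not have. A competing algorithm would replace `plan_to` with a learned policy acting on camera images, while an evaluation check like the one in `run_episode` could stay the same.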

Teams will compete in three phases. In phase one, they will be given the 75 digital-only simulation environments to train and validate their algorithms. In phase two, the highest performers will receive four new simulation environments, each with a physical doppelganger, and will be able to refine their algorithms remotely by loading them onto AI2's real robots.

In the final phase, the top teams will need to show that their algorithms generalize to the remaining 10 apartments, both digital and physical. The best performers in this final phase will win bragging rights and an invitation to demo their models at the Conference on Computer Vision and Pattern Recognition (CVPR), a leading AI research conference for vision-based systems.

After the challenge is over, AI2 plans to keep the setup available so that anyone can continue using the environment for robotics research. Researchers whose algorithms clear a certain accuracy threshold in the simulated environments, showing that they won't crash the robots, will be allowed to deploy them remotely in the physical apartments. The physical room will rotate among the different furniture configurations.

“We are going to maintain this environment, and we are going to maintain these robots,” says Ani Kembhavi, a research scientist at AI2 who is leading the project. His team plans to develop a time-sharing system to allow different researchers to take turns remotely testing their algorithms in the real world.

AI2 hopes the strategy will make robotics research more accessible by eliminating as much of the associated hardware cost as possible. It also hopes the scheme will inspire other well-funded organizations to open up their resources in similar ways. In addition, AI2 deliberately built its reconfigurable room from low-cost materials and globally available Ikea furniture; the whole setup cost roughly $10,000. Researchers who want to build their own physical training spaces can replicate it locally and still match the virtual simulation environments.

Kembhavi, whose dad is an astronomer, likens the idea to the global sharing of telescopes. “Communities like astronomy have figured out how to take expensive resources and make it available to researchers all around the world,” he says.

“That's our vision for this environment,” he adds. “Embodied AI for all.”