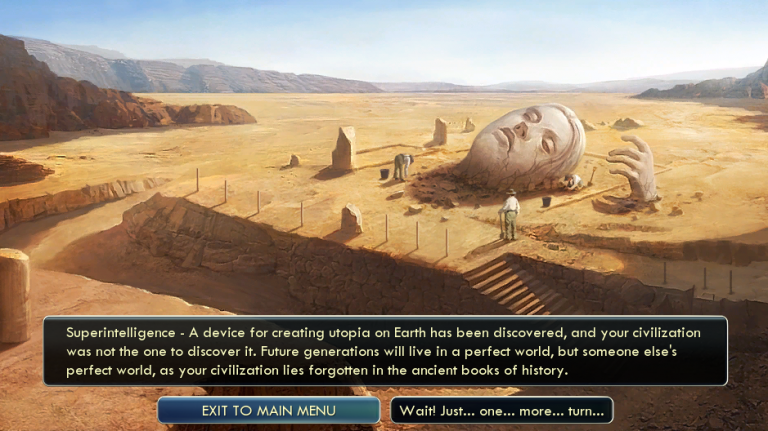

Researchers at the University of Cambridge built a game mod for Civilization that allows people to grow—and tame—a human-beating superintelligence.

The game: Shahar Avin from the university’s Centre for the Study of Existential Risk says the game allows players to build AI R&D capacity in their virtual world. Success means creating a smarter-than-human AI. At the same time, the risk of a rogue AI builds, and it can be mitigated only through safety research.

The goal: “We wanted to test our understanding of the concepts relating to existential risk from artificial superintelligence by deploying them in a restricted yet rich world simulator, [and also test how] players respond … when given the opportunity” to make AI decisions themselves, Avin told me.

Fear or acceptance: The project is also an outreach tool, taking “serious issues to a wider audience.” Avin is “aware” that it could perpetuate fears about AI. He argues, though, that players “see the risk, but also see the range of tools at their disposal to mitigate it.”