Neural networks can answer a question about a photo and point to the evidence for their answer by annotating the image.

How it works: To test the Pointing and Justification Explanation (PJ-X) model, researchers gathered data sets made up of pairs of photographs showing similar scenes, like different types of lunches. Then they came up with a question that has a different answer for each photo in the pair (“Is this a healthy meal?”).

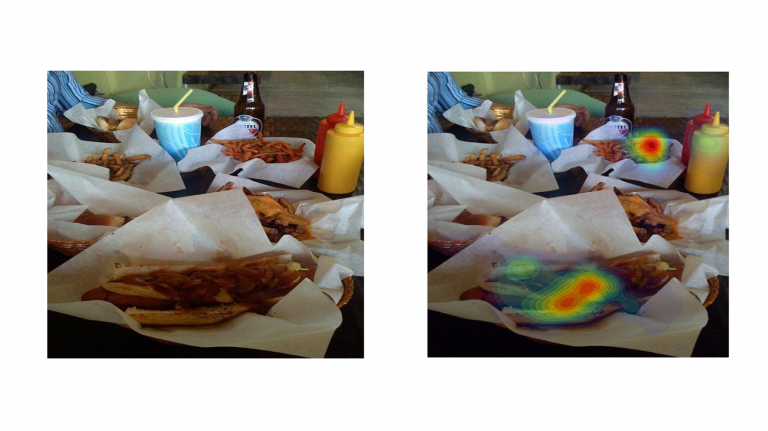

What it does: After being trained on enough data, PJ-X could both answer the question using text (“No, it’s a hot dog with lots of toppings”) and put a heat map over the photo to highlight the reasons behind the answer (the hot dog and its many toppings).
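For readers curious what "putting a heat map over the photo" can look like in practice, here is a minimal sketch in Python with NumPy. It is not the PJ-X code: it assumes the model has already produced a coarse grid of attention weights, and the function names (`normalize`, `upsample_nearest`, `overlay_heatmap`), the red tint, and the toy data are all hypothetical choices made for illustration.

```python
import numpy as np

def normalize(attn):
    """Scale attention weights to the range [0, 1]."""
    attn = attn.astype(np.float64)
    span = attn.max() - attn.min()
    if span == 0:
        return np.zeros_like(attn)
    return (attn - attn.min()) / span

def upsample_nearest(attn, height, width):
    """Stretch a coarse attention grid to image size (nearest neighbor)."""
    rows = np.arange(height) * attn.shape[0] // height
    cols = np.arange(width) * attn.shape[1] // width
    return attn[rows[:, None], cols[None, :]]

def overlay_heatmap(image, attn, alpha=0.6):
    """Blend a red heat map over an RGB image of shape (H, W, 3), values 0-255."""
    height, width = image.shape[:2]
    heat = upsample_nearest(normalize(attn), height, width)
    red = np.zeros_like(image, dtype=np.float64)
    red[..., 0] = 255.0
    weight = (alpha * heat)[..., None]  # per-pixel blend strength
    blended = (1.0 - weight) * image + weight * red
    return blended.round().astype(np.uint8)

# Hypothetical toy data: a uniform gray 4x4 "photo" and a 2x2 attention grid
# in which the bottom-right region matters most to the answer.
photo = np.full((4, 4, 3), 100, dtype=np.uint8)
attention = np.array([[0.0, 0.1], [0.2, 0.9]])
result = overlay_heatmap(photo, attention)
```

Pixels in the region with the highest attention weight are tinted most strongly, while pixels with the lowest weight are left unchanged. That is the visual effect described above, with the hot dog and its toppings standing out from the rest of the photo.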

Why it matters: Typical AIs are black boxes—good at identifying things, but with algorithmic logic that is opaque to humans. For a lot of AI uses, however—a system that diagnoses disease, for instance—understanding how the technology came to its decision could be critical.