Serious quantum computers are finally here. What are we going to do with them?

Hello, quantum world.

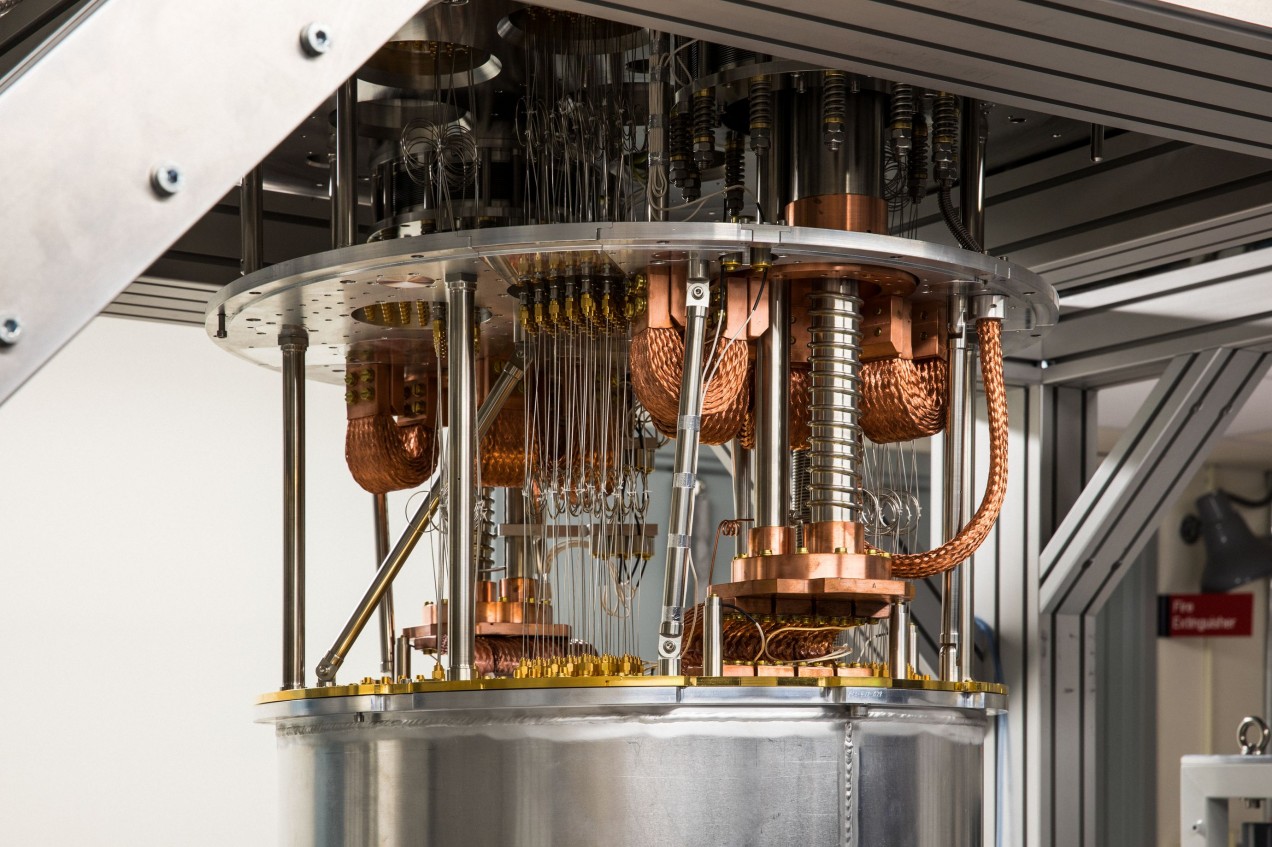

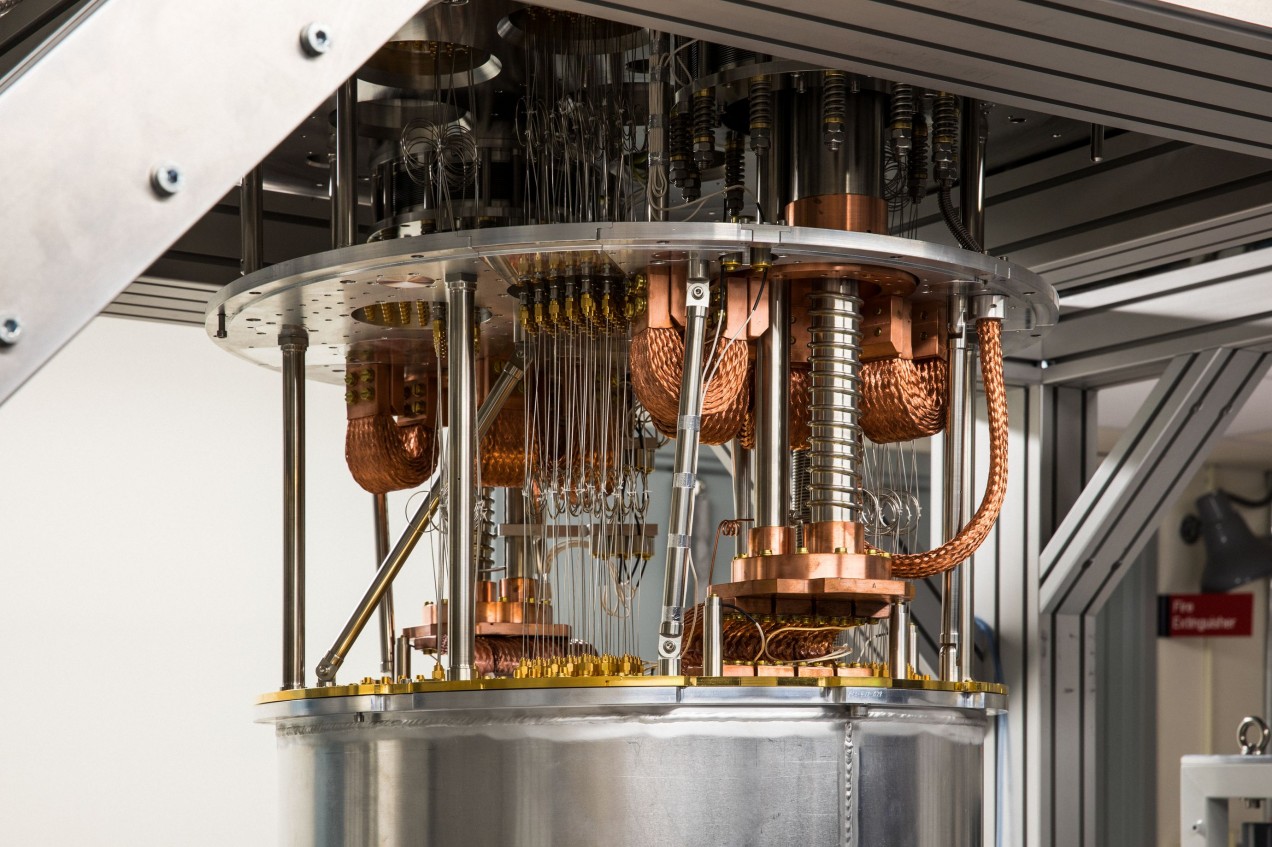

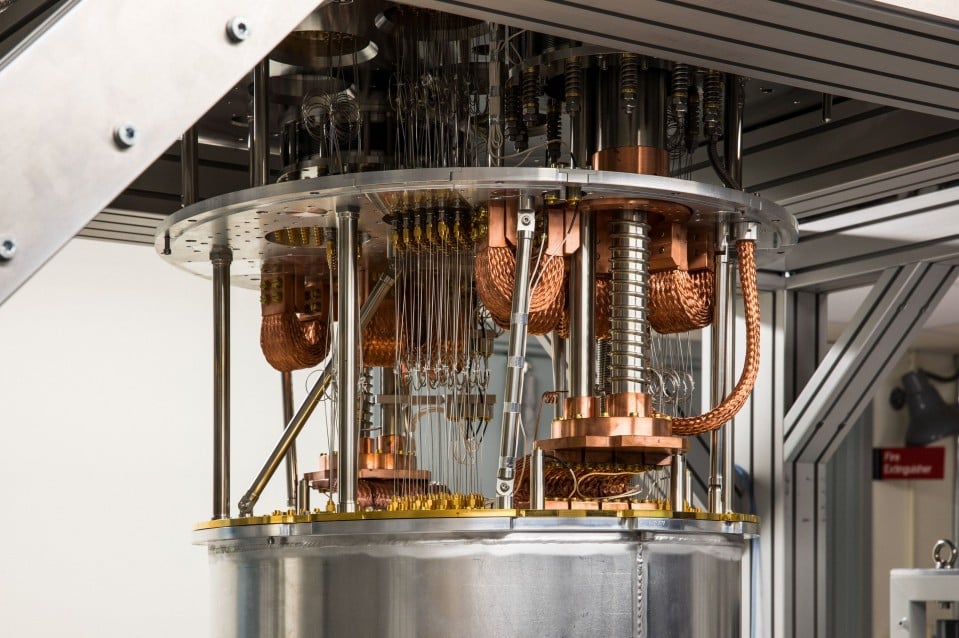

Inside a small laboratory in lush countryside about 50 miles north of New York City, an elaborate tangle of tubes and electronics dangles from the ceiling. This mess of equipment is a computer. Not just any computer, but one on the verge of passing what may go down as one of the most important milestones in the history of the field.

Quantum computers promise to run calculations far beyond the reach of any conventional supercomputer. They might revolutionize the discovery of new materials by making it possible to simulate the behavior of matter down to the atomic level. Or they could upend cryptography and security by cracking otherwise invincible codes. There is even hope they will supercharge artificial intelligence by crunching through data more efficiently.

Yet only now, after decades of gradual progress, are researchers finally close to building quantum computers powerful enough to do things that conventional computers cannot. It’s a landmark somewhat theatrically dubbed “quantum supremacy.” Google has been leading the charge toward this milestone, while Intel and Microsoft also have significant quantum efforts. And then there are well-funded startups including Rigetti Computing, IonQ, and Quantum Circuits.

No other contender can match IBM’s pedigree in this area, though. Starting 50 years ago, the company produced advances in materials science that laid the foundations for the computer revolution. Which is why, last October, I found myself at IBM’s Thomas J. Watson Research Center to try to answer these questions: What, if anything, will a quantum computer be good for? And can a practical, reliable one even be built?

Why we think we need a quantum computer

The research center, located in Yorktown Heights, looks a bit like a flying saucer as imagined in 1961. It was designed by the neo-futurist architect Eero Saarinen and built during IBM’s heyday as a maker of large mainframe business machines. IBM was the world’s largest computer company, and within a decade of the research center’s construction it had become the world’s fifth-largest company of any kind, just behind Ford and General Electric.

While the hallways of the building look out onto the countryside, the design is such that none of the offices inside have any windows. It was in one of these cloistered rooms that I met Charles Bennett. Now in his 70s, he has large white sideburns, wears black socks with sandals, and even sports a pocket protector with pens in it. Surrounded by old computer monitors, chemistry models, and, curiously, a small disco ball, he recalled the birth of quantum computing as if it were yesterday.

When Bennett joined IBM in 1972, quantum physics was already half a century old, but computing still relied on classical physics and the mathematical theory of information that Claude Shannon had published in 1948 while working at Bell Labs. It was Shannon who defined the quantity of information in terms of the number of “bits” (a term he popularized but did not coin) required to store it. Those bits, the 0s and 1s of binary code, are the basis of all conventional computing.

A year after arriving at Yorktown Heights, Bennett helped lay the foundation for a quantum information theory that would challenge all that. It relies on exploiting the peculiar behavior of objects at the atomic scale. At that scale, a particle can exist “superposed” in many states (e.g., many different positions) at once. Two particles can also exhibit “entanglement,” so that measuring the state of one instantly reveals something about the state of the other, no matter how far apart they are.

Bennett and others realized that some kinds of computations that are exponentially time-consuming, or even impossible, could be efficiently performed with the help of quantum phenomena. A quantum computer would store information in quantum bits, or qubits. Qubits can exist in superpositions of 1 and 0, and entanglement and a trick called interference can be used to find the solution to a computation over an exponentially large number of states. It’s annoyingly hard to compare quantum and classical computers, but roughly speaking, describing the state of a quantum computer with just a few hundred qubits takes more numbers than there are atoms in the known universe.
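To make superposition, entanglement, and interference a little more concrete, here is a minimal sketch in plain Python that tracks the state of a tiny quantum register on an ordinary computer. It assumes the textbook state-vector picture, in which n qubits are described by 2**n complex amplitudes; the function names are illustrative and do not come from IBM or any particular tool kit.

```python
import math

# Assumption: qubit 0 is the least significant bit of the amplitude index,
# so index 0b10 means "qubit 1 is 1, qubit 0 is 0".

def apply_hadamard(state, qubit):
    """Put one qubit into (or take it out of) an equal superposition."""
    s = 1 / math.sqrt(2)
    new = list(state)
    bit = 1 << qubit
    for i in range(len(state)):
        if (i & bit) == 0:
            a, b = state[i], state[i | bit]
            new[i] = s * (a + b)        # amplitudes add
            new[i | bit] = s * (a - b)  # amplitudes can also cancel
    return new

def apply_cnot(state, control, target):
    """Flip the target qubit wherever the control qubit is 1."""
    new = list(state)
    for i in range(len(state)):
        if i & (1 << control):
            new[i] = state[i ^ (1 << target)]
    return new

def probabilities(state):
    """Chance of seeing each bit pattern when the register is measured."""
    return [abs(a) ** 2 for a in state]

# Two qubits starting in 00: superpose qubit 0, then entangle it with qubit 1.
bell = apply_cnot(apply_hadamard([1, 0, 0, 0], 0), 0, 1)
print(probabilities(bell))  # only 00 and 11 are possible, about 50/50 each

# Interference: a second Hadamard makes the "1" amplitudes cancel out.
print(apply_hadamard(apply_hadamard([1, 0], 0), 0))

# A 300-qubit register needs more amplitudes than a common rough
# estimate (10**80) of the number of atoms in the known universe.
print(2 ** 300 > 10 ** 80)
```

In the entangled state, measuring one qubit tells you what the other will read, which is the correlation described above. The list of amplitudes doubles with every added qubit, which is why this bookkeeping quickly gets out of hand.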

In the summer of 1981, IBM and MIT organized a landmark event called the First Conference on the Physics of Computation. It took place at Endicott House, a French-style mansion not far from the MIT campus.

In a photo that Bennett took during the conference, several of the most influential figures from the history of computing and quantum physics can be seen on the lawn, including Konrad Zuse, who developed the first programmable computer, and Richard Feynman, an important contributor to quantum theory. Feynman gave the conference’s keynote speech, in which he raised the idea of computing using quantum effects. “The biggest boost quantum information theory got was from Feynman,” Bennett told me. “He said, ‘Nature is quantum, goddamn it! So if we want to simulate it, we need a quantum computer.’”

IBM’s quantum computer—one of the most promising in existence—is located just down the hall from Bennett’s office. The machine is designed to create and manipulate the essential element in a quantum computer: the qubits that store information.

The gap between the dream and the reality

The IBM machine exploits quantum phenomena that occur in superconducting materials. For instance, sometimes current will flow clockwise and counterclockwise at the same time. IBM’s computer uses superconducting circuits in which two distinct electromagnetic energy states make up a qubit.

The superconducting approach has key advantages. The hardware can be made using well-established manufacturing methods, and a conventional computer can be used to control the system. The qubits in a superconducting circuit are also easier to manipulate and less delicate than individual photons or ions.

Inside IBM’s quantum lab, engineers are working on a version of the computer with 50 qubits. You can run a simulation of a simple quantum computer on a normal computer, but at around 50 qubits it becomes nearly impossible. That means IBM is theoretically approaching the point where a quantum computer can solve problems a classical computer cannot: in other words, quantum supremacy.
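A rough back-of-the-envelope calculation suggests why 50 qubits is a natural threshold. The sketch below assumes the most straightforward kind of simulation, one that stores every amplitude of the quantum state as a 16-byte complex number; cleverer simulation methods can need less memory for some circuits, so treat the figures as an illustration rather than a hard limit.

```python
def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory needed to hold all 2**n amplitudes of an n-qubit state.

    Assumption: each amplitude is a double-precision complex number
    (two 8-byte floats), which is a common but not universal choice.
    """
    return (2 ** n_qubits) * bytes_per_amplitude

for n in (10, 30, 40, 50):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {gib:,.6f} GiB")
```

Under these assumptions, 30 qubits fit in the 16 GiB of memory found in a good laptop, while 50 qubits would need about 16 million GiB, or 16 pebibytes.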

But as IBM’s researchers will tell you, quantum supremacy is an elusive concept. You would need all 50 qubits to work perfectly, when in reality quantum computers are beset by errors that need to be corrected. It is also devilishly difficult to maintain qubits for any length of time; they tend to “decohere,” or lose their delicate quantum nature, much as a smoke ring breaks up at the slightest air current. And the more qubits, the harder both challenges become.

“If you had 50 or 100 qubits and they really worked well enough, and were fully error-corrected—you could do unfathomable calculations that can’t be replicated on any classical machine, now or ever,” says Robert Schoelkopf, a Yale professor and founder of a company called Quantum Circuits. “The flip side to quantum computing is that there are exponential ways for it to go wrong.”

Another reason for caution is that it isn’t obvious how useful even a perfectly functioning quantum computer would be. It doesn’t simply speed up any task you throw at it; in fact, for many calculations, it would actually be slower than classical machines. Only a handful of algorithms have so far been devised where a quantum computer would clearly have an edge. And even for those, that edge might be short-lived. The most famous quantum algorithm, developed by Peter Shor at MIT, is for finding the prime factors of an integer. Many common cryptographic schemes rely on the fact that this is hard for a conventional computer to do. But cryptography could adapt, creating new kinds of codes that don’t rely on factorization.
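For the curious, the structure of Shor’s approach can be sketched on an ordinary computer. Factoring an integer N reduces to finding the "order" of a number a, meaning the smallest r for which a**r leaves remainder 1 when divided by N. The sketch below finds r by brute force, which is exactly the step a quantum computer is expected to speed up; everything else is classical arithmetic. The function names are illustrative, and this is a toy version for small numbers, not Shor’s algorithm itself.

```python
from math import gcd

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    Assumes gcd(a, n) == 1. This is the slow step that Shor's
    algorithm would hand to a quantum computer.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(n, a):
    """Try to split n into two factors using the order of a modulo n.

    Returns a sorted pair of factors, or None if this choice of a
    is unlucky and another one should be tried.
    """
    shared = gcd(a, n)
    if shared != 1:
        return tuple(sorted((shared, n // shared)))  # lucky guess
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # an odd order gives no usable square root
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root of 1, no factor revealed
    p = gcd(half - 1, n)
    return tuple(sorted((p, n // p)))

print(factor_from_order(15, 7))  # (3, 5)
print(factor_from_order(21, 2))  # (3, 7)
```

For the tiny numbers here the brute-force loop finishes instantly. For the integers hundreds of digits long that are used in cryptography, no known classical method finds the order in a reasonable time, and that is the gap the quantum algorithm targets.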

This is why, even as they near the 50-qubit milestone, IBM’s own researchers are keen to dispel the hype around it. At a table in the hallway that looks out onto the lush lawn outside, I encountered Jay Gambetta, a tall, easygoing Australian who researches quantum algorithms and potential applications for IBM’s hardware. “We’re at this unique stage,” he said, choosing his words with care. “We have this device that is more complicated than you can simulate on a classical computer, but it’s not yet controllable to the precision that you could do the algorithms you know how to do.”

What gives the IBMers hope is that even an imperfect quantum computer might still be a useful one.

Gambetta and other researchers have zeroed in on an application that Feynman envisioned back in 1981. Chemical reactions and the properties of materials are determined by the interactions between atoms and molecules. Those interactions are governed by quantum phenomena. A quantum computer can—at least in theory—model those in a way a conventional one cannot.

Last year, Gambetta and colleagues at IBM used a seven-qubit machine to simulate the precise structure of beryllium hydride. At just three atoms, it is the most complex molecule ever modeled with a quantum system. Ultimately, researchers might use quantum computers to design more efficient solar cells, more effective drugs, or catalysts that turn sunlight into clean fuels.

Those goals are a long way off. But, Gambetta says, it may be possible to get valuable results from an error-prone quantum machine paired with a classical computer.

From a physicist’s dream to an engineer’s nightmare

“The thing driving the hype is the realization that quantum computing is actually real,” says Isaac Chuang, a lean, soft-spoken MIT professor. “It is no longer a physicist’s dream—it is an engineer’s nightmare.”

Chuang led the development of some of the earliest quantum computers, working at IBM in Almaden, California, during the late 1990s and early 2000s. Though he is no longer working on them, he thinks we are at the beginning of something very big—that quantum computing will eventually even play a role in artificial intelligence.

But he also suspects that the revolution will not really begin until a new generation of students and hackers get to play with practical machines. Quantum computers require not just different programming languages but a fundamentally different way of thinking about what programming is. As Gambetta puts it: “We don’t really know what the equivalent of ‘Hello, world’ is on a quantum computer.”

We are beginning to find out. In 2016 IBM connected a small quantum computer to the cloud. Using a programming tool kit called QISKit, you can run simple programs on it; thousands of people, from academic researchers to schoolkids, have built QISKit programs that run basic quantum algorithms. Now Google and other companies are also putting their nascent quantum computers online. You can’t do much with them, but at least they give people outside the leading labs a taste of what may be coming.

The startup community is also getting excited. A short while after seeing IBM’s quantum computer, I went to the University of Toronto’s business school to sit in on a pitch competition for quantum startups. Teams of entrepreneurs nervously got up and presented their ideas to a group of professors and investors. One company hoped to use quantum computers to model the financial markets. Another planned to have them design new proteins. Yet another wanted to build more advanced AI systems. What went unacknowledged in the room was that each team was proposing a business built on a technology so revolutionary that it barely exists. Few seemed daunted by that fact.

This enthusiasm could sour if the first quantum computers are slow to find a practical use. The best guess from those who truly know the difficulties—people like Bennett and Chuang—is that the first useful machines are still several years away. And that’s assuming the problem of managing and manipulating a large collection of qubits won’t ultimately prove intractable.

Still, the experts hold out hope. When I asked him what the world might be like when my two-year-old son grows up, Chuang, who learned to use computers by playing with microchips, responded with a grin. “Maybe your kid will have a kit for building a quantum computer,” he said.