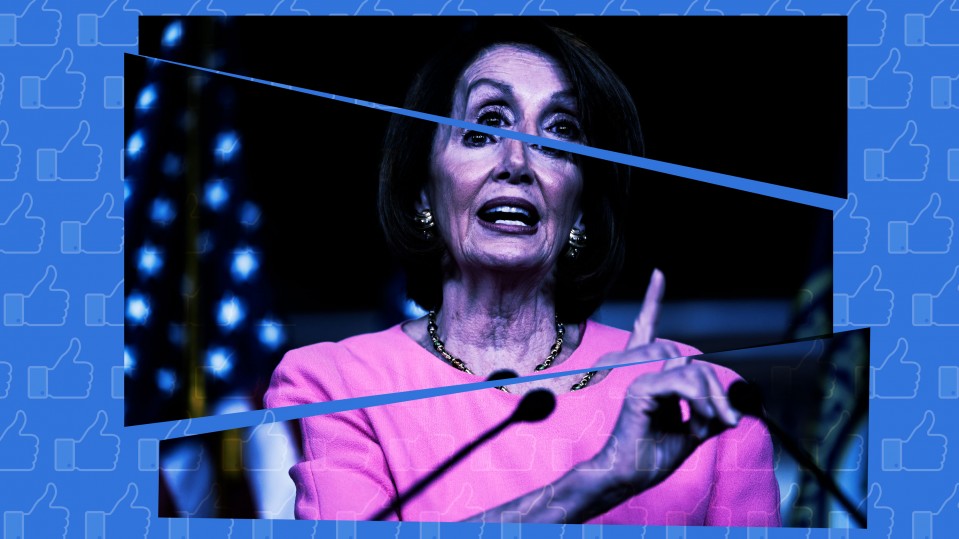

Why Facebook is right not to take down the doctored Pelosi video

Taking down the ‘drunk’ Pelosi video could set a precedent for censoring political satire or dissent.

Critics are condemning Facebook for not taking down a video of House Speaker Nancy Pelosi that was slowed to make her seem drunk. But some experts argue that removing the video might have set a precedent that would actually cause more harm.

The video makes Pelosi look strange, but it’s not a “deepfake”—it doesn’t falsely show her saying anything she didn’t actually say. It’s an example of what MIT Media Lab researcher Hossein Derakhshan calls “malinformation,” or basically true information that has been subtly manipulated to harm someone.

And while Derakhshan thinks it might have been okay to take down a faked video that showed Pelosi making a racial slur, for example, he argues that it’s too much to expect platforms to police malinformation. “Usually when people do these manipulations, I don’t think it’s illegal,” he says. “So there’s no ground for removing them or requesting them to be taken off these platforms.”

It’s easy to imagine creating a rule like “Take down false examples of hate speech,” but far harder to come up with a rule that requires taking down the Pelosi video but not other forms of mockery, satire, or dissent.

Political mockery, after all, often uses information out of context and comes with intent to harm. An overly broad rule could backfire in authoritarian countries, according to Alexios Mantzarlis, a fellow at TED studying misinformation, who previously helped launch Facebook’s partnerships with fact-checkers.

“My position here is that this does not seem to cause someone real-life harm, and that once we start taking things down, the precedent might be slippery, especially [for] politicians,” he says. It’s not hard to imagine Facebook being pressured into taking down a video making fun of someone like Brazil’s Jair Bolsonaro, or Donald Trump.

That doesn’t mean there’s never a reason to take down videos (Mantzarlis says he’s not a free-speech absolutist), just that there are nuances when it comes to the types of manipulation, the potential for harm, and what platforms should do.

In any case, “leave it up” or “take it down” are not the only options. The platform did flag the video with warnings from fact-checking organizations, but Mantzarlis tweeted four other ways Facebook could have taken action more effectively.

4 thoughts on what Facebook could have done on the Pelosi video that fall short of taking it down but might still help in the future.

— Alexios (@Mantzarlis) May 26, 2019

(My takedown-hot take is available at room temperature here: https://t.co/iZaPHiuhn6)

For instance, the video could have been flagged more quickly, or more prominently: unless you try to share the video, you see only a subtle warning that its authenticity is in doubt. Facebook could also, Mantzarlis suggests, invite users who shared the video to unshare or unlike it, and then share the resulting data with researchers trying to understand what works in fighting the spread of manipulated media.

“This is the largest real-life experiment in combating digital misinformation in history,” he says, “and so the wealth of knowledge that this could generate and provide to researchers and other practitioners fighting misinformation would be really extraordinary.”

Derakhshan agrees that it’s important to label videos that have been fabricated in some way, but he suggests that the best solution is nurturing media literacy. As we deal more and more with images, it’s important to teach people to be skeptical of videos, in the same way it’s now common to wonder if an image has been Photoshopped. “Everybody should always think about the possibility of facing fabricated video,” he says.