
Bill Gates just backed a chip startup that uses light to turbocharge AI

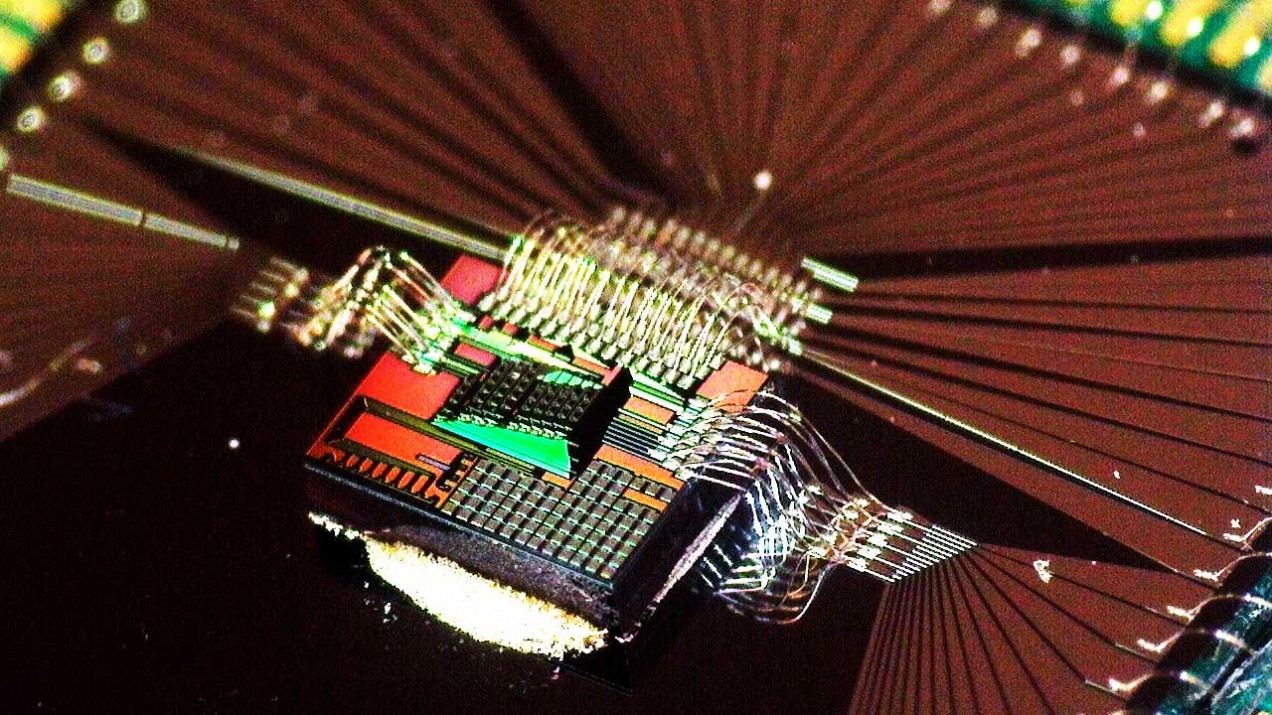

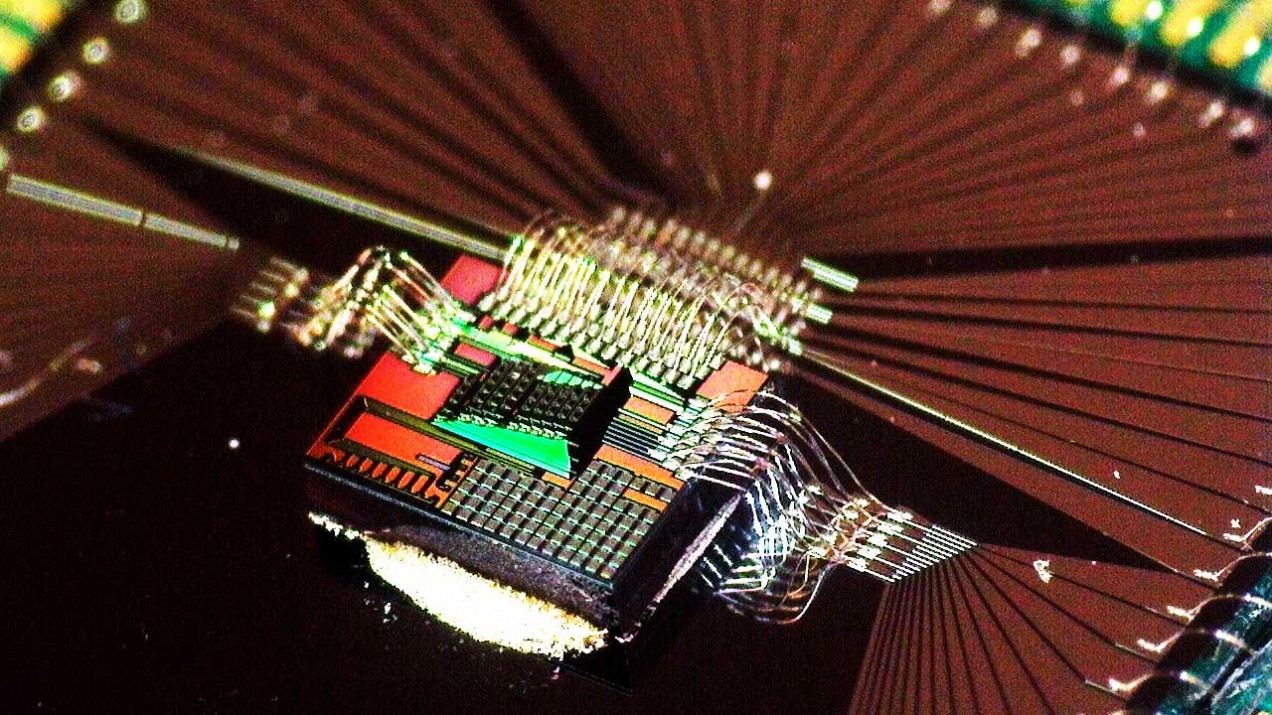

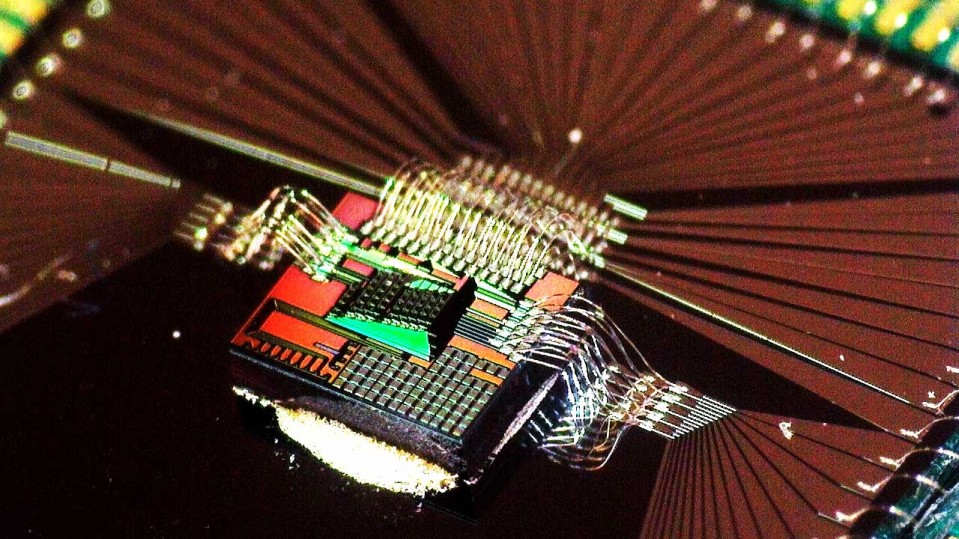

Luminous Computing has developed an optical microchip that runs AI models much faster than other semiconductors while using less power.

Advances in computing, from speedier processors to cheaper data storage, helped ignite the new AI era. Now demand for even faster, more energy-efficient AI models is driving a wave of innovation in semiconductors.

Luminous Computing, which recently raised $9 million of seed funding from prominent investors including Bill Gates and Uber CEO Dara Khosrowshahi, has an ambitious plan to accelerate AI with a new chip. While conventional semiconductors use electrons to help carry out the demanding mathematical calculations that power AI models, Luminous is using light instead.

Many industries are trying to pack an increasing amount of AI into their machines, including makers of autonomous cars and drones. But widely used electrical chips like central processing units aren’t ideal for those tasks because they use a lot of power and may not be able to process data fast enough.

These limitations can cause lags and delays—annoying if you’re waiting for some machine-learning results for a research paper, but far more serious if you’re relying on an AI algorithm to guide a car down a busy street.

The bottleneck is getting worse: a study by the research lab OpenAI found that the amount of computing power used to train the largest AI models has been doubling every three and a half months.
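To put that doubling rate in perspective, the compounding can be worked out directly. This simplified sketch assumes a perfectly steady doubling time $T_d$ of 3.5 months; $G(t)$ is our label for the growth factor after $t$ months and does not come from the study itself:

```latex
\begin{aligned}
G(t) &= 2^{t/T_d}, \qquad T_d = 3.5\ \text{months} \\
G(12\ \text{months}) &= 2^{12/3.5} \approx 2^{3.43} \approx 10.8
\end{aligned}
```

In other words, at that pace the compute used for the biggest training runs grows roughly tenfold every year.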

Luminous’s CEO and cofounder, Marcus Gomez, notes that in spite of all of the hype around AI, the limitations of the underlying hardware are frustrating progress. “Silicon Valley promised us this AI-driven Star Trek reality years ago,” he says, “but we’re still waiting for it to arrive.” More powerful AI chips could boost everything from machine-learning models that assist doctors with medical diagnoses to new kinds of AI-driven apps that can run on a smartphone.

Optical solution

Luminous sees light as the answer. It uses lasers to beam light through tiny structures on its chip, known as waveguides. By sending different colors of light through the same waveguide at once, each color carrying its own piece of data (a technique known as wavelength-division multiplexing), the chip can outstrip the data-carrying capacity of conventional electrical chips.
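The idea of carrying several data streams on different colors of light can be illustrated with a highly idealized toy model. This sketch assumes channels at different wavelengths never interfere and that filters separate them perfectly; real devices add loss and crosstalk. The function names and the wavelength values are hypothetical and not taken from Luminous's design.

```python
# Idealized toy model of wavelength-division multiplexing (WDM).
# Assumption: channels at different wavelengths never interfere and are
# separated perfectly; real optical hardware has loss and crosstalk.


def multiplex(streams: dict[float, list[int]]) -> list[dict[float, int]]:
    """Combine per-wavelength bit streams into one shared waveguide signal.

    `streams` maps a (hypothetical) wavelength in nanometres to a list of
    bits. At every time step the shared waveguide carries one bit per
    wavelength simultaneously.
    """
    if not streams:
        return []
    lengths = {len(bits) for bits in streams.values()}
    if len(lengths) != 1:
        raise ValueError("all streams must have the same length")
    n_steps = lengths.pop()
    return [{wl: bits[t] for wl, bits in streams.items()} for t in range(n_steps)]


def demultiplex(signal: list[dict[float, int]], wavelength: float) -> list[int]:
    """Recover one stream by 'filtering' the shared signal at one wavelength."""
    return [slot[wavelength] for slot in signal]


if __name__ == "__main__":
    streams = {
        1550.0: [1, 0, 1, 1],
        1551.6: [0, 0, 1, 0],
        1553.2: [1, 1, 0, 0],
    }
    signal = multiplex(streams)
    # Three colors share one waveguide: 12 bits move in 4 time steps.
    print(len(signal), sum(len(slot) for slot in signal))
    print(demultiplex(signal, 1551.6))
```

In this model, adding another wavelength adds capacity without adding time steps, which is the intuition behind the claim that light can move more data at once.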

The ability to transport very large amounts of information swiftly means optical processors are ideally suited to handling the vast number of computations that drive AI models. They also require far less power than electrical ones.
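Much of the math inside AI models consists of weighted sums (dot products), and a matrix-vector product is simply one weighted sum per row. The sketch below shows, in principle, how light could compute one. It is an illustrative assumption, not Luminous's actual architecture. Each input is encoded as optical power on its own wavelength, a per-wavelength attenuator scales that power by a weight between 0 and 1, and a single photodetector that measures the total power performs the summation. The function names are hypothetical.

```python
import math


def optical_dot_product(inputs: list[float], weights: list[float]) -> float:
    """Toy model of a weighted sum computed with light.

    Illustrative assumptions only:
    - each input x_i is the optical power on its own wavelength (so x_i >= 0);
    - a per-wavelength attenuator passes a fraction w_i of that power,
      so each weight lies in [0, 1];
    - one photodetector measures total power across all wavelengths,
      which is where the addition physically happens.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    if any(x < 0 for x in inputs):
        raise ValueError("optical power cannot be negative")
    if any(not 0.0 <= w <= 1.0 for w in weights):
        raise ValueError("passive attenuation must be between 0 and 1")
    # Each channel is attenuated independently...
    weighted_channels = [x * w for x, w in zip(inputs, weights)]
    # ...and the detector adds up every channel's power at once.
    return math.fsum(weighted_channels)


def optical_matvec(matrix: list[list[float]], vector: list[float]) -> list[float]:
    """A matrix-vector product is one weighted sum per matrix row."""
    return [optical_dot_product(vector, row) for row in matrix]


if __name__ == "__main__":
    print(optical_dot_product([1.0, 2.0, 3.0], [0.5, 0.25, 1.0]))  # 4.0
    print(optical_matvec([[1.0, 0.0], [0.5, 0.5]], [2.0, 4.0]))  # [2.0, 3.0]
```

The restriction to non-negative inputs and weights reflects a simple passive model; practical designs need extra tricks to represent negative weights.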

Mitchell Nahmias, another cofounder of Luminous and its chief technology officer, says its current prototype is three orders of magnitude more energy efficient than other state-of-the-art AI chips. The startup’s processor, an early prototype of which is pictured at the top of this story, is based on years of research conducted by Nahmias and other academics at Princeton University.

Still, Luminous faces stiff competition. Startups like Lightelligence and Lightmatter (spot the branding theme here) are also working on optical chips to accelerate AI. And semiconductor behemoths like Intel are stepping up research in the field, which could lead them to launch new optical processors.

Dirk Englund, an MIT professor who's also a technical advisor to Lightmatter, thinks Luminous may find it challenging to manage the various devices required to manipulate light once it starts to ramp up production of its chips. To work, optical chips need everything from lasers to electro-optic modulators that control the light, and that complexity is a big reason they haven't yet caught on widely.

AI breakthroughs

Gates and other backers are betting that Gomez, Nahmias, and Michael Gao, Luminous's other cofounder, can overcome this and other hurdles. They are also betting that the companies that break through the computing bottleneck will be the ones to help unleash the true potential of AI.

Ali Partovi of Neo, a venture fund that invested in Luminous’s seed round, points out that even things like voice assistants on smartphones are still frustratingly prone to glitches because the devices lack enough AI computing power. “Just imagine a world,” says Partovi, “in which Siri really worked well all of the time.”