How We Feel About Robots That Feel

As robots become smart enough to detect our feelings and respond appropriately, they could have something like emotions of their own. But that won’t necessarily make them more like humans.

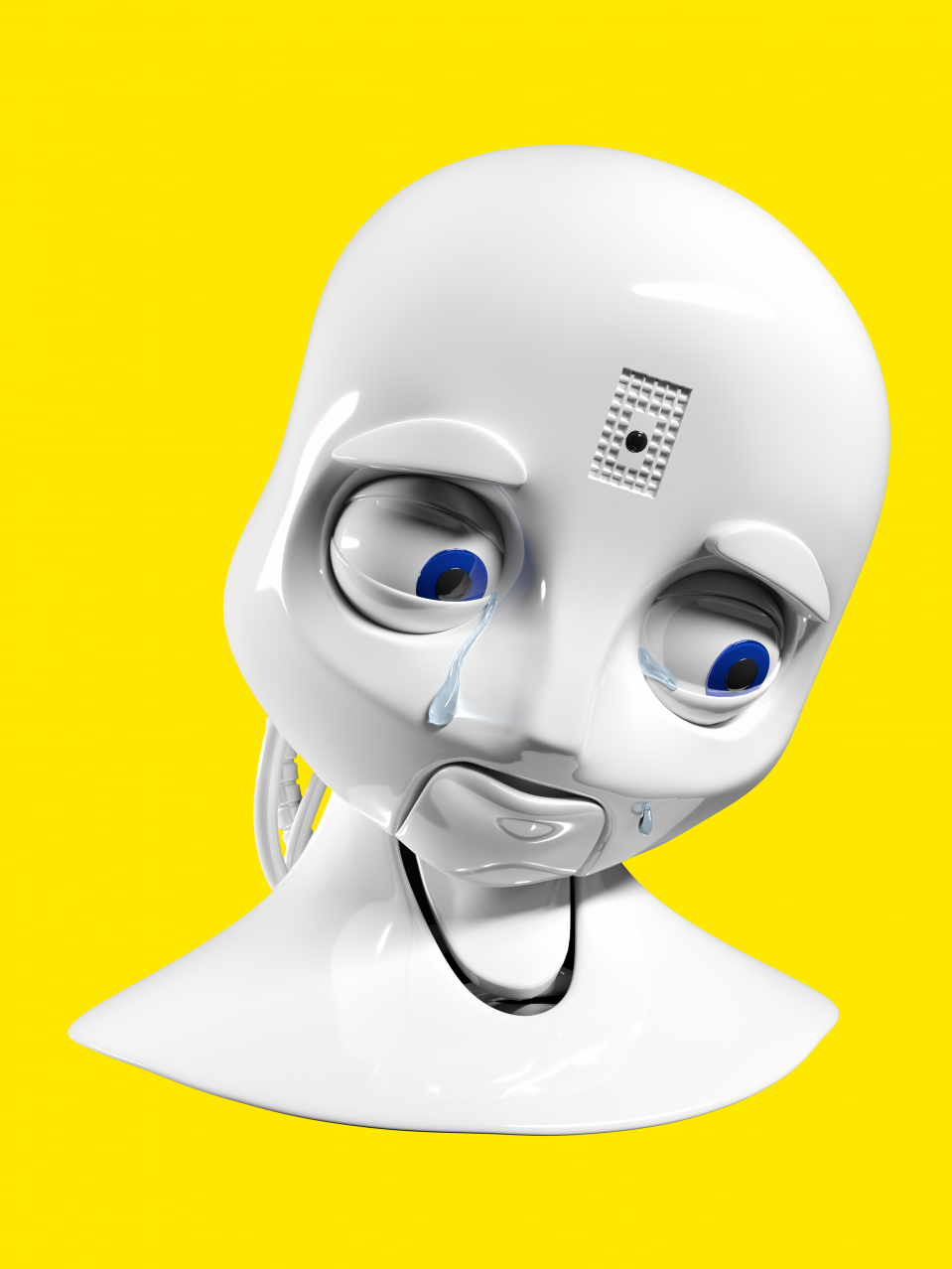

Octavia, a humanoid robot designed to fight fires on Navy ships, has mastered an impressive range of facial expressions.

When she’s turned off, she looks like a human-size doll. She has a smooth white face with a snub nose. Her plastic eyebrows sit evenly on her forehead like two little capsized canoes.

When she’s on, however, her eyelids fly open and she begins to display emotion. She can nod her head in a gesture of understanding; she can widen her eyes and lift both her eyebrows in a convincing semblance of alarm; or she can cock her head to one side and screw up her mouth, replicating human confusion. To comic effect, she can even arch one eyebrow and narrow the opposite eye while tapping her metal fingers together, as though plotting acts of robotic revenge.

But Octavia’s range of facial expressions isn’t her most impressive trait. What’s amazing is that her emotional affect is an accurate response to her interactions with humans. She looks pleased, for instance, when she recognizes one of her teammates. She looks surprised when a teammate gives her a command she wasn’t expecting. She looks confused if someone says something she doesn’t understand.

She can show appropriate emotional affect because she processes massive amounts of information about her environment. She can see, hear, and touch. She takes visual stock of her surroundings using the two cameras built into her eyes and analyzes characteristics like facial features, complexion, and clothing. She can detect people’s voices, using four microphones and a voice-recognition program called Sphinx. She can identify 25 different objects by touch, having learned them by using her fingers to physically manipulate them into various possible positions and shapes. Taken together, these perceptual skills form a part of her “embodied cognitive architecture,” which allows her—according to her creators at the Navy Center for Applied Research in Artificial Intelligence—to “think and act in ways similar to people.”

That’s an exciting claim, but it’s not necessarily shocking. We’re accustomed to the idea of machines acting like people. Automatons created in 18th-century France could dance, keep time, and play the drums, the dulcimer, or the piano. As a kid growing up in the 1980s, I for some reason coveted a doll advertised for the ability to pee in her pants.

We’re even accustomed to the idea of machines thinking in ways that remind us of humans. Many of our long-cherished high-water marks for human cognition—the ability to beat a grandmaster at chess, for example, or to compose a metrically accurate sonnet—have been met and surpassed by computers.

Octavia’s actions, however—the fearful widening of her eyes, the confused furrow of her plastic eyebrows—seem to go a step further. They imply that in addition to thinking the way we think, she’s also feeling human emotions.

That’s not really the case: Octavia’s emotional affect, according to Gregory Trafton, who leads the Intelligent Systems Section at the Navy AI center, is merely meant to demonstrate the kind of thinking she’s doing and make it easier for people to interact with her. But it’s not always possible to draw a line between thinking and feeling. As Trafton acknowledges, “It’s clear that people’s thoughts and emotions are different but impact each other.” And if, as he says, “emotions influence cognition and cognition influences emotion,” Octavia’s ability to think, reason, and perceive points to some of the bigger questions that will accompany the rise of intelligent machines. At what point will machines be smart enough to feel something? And how would we really know?

Octavia is programmed with theory of mind, meaning that she can anticipate the mental states of her human teammates. She understands that people have potentially conflicting beliefs or intentions. When Octavia is given a command that differs from her expectations, she runs simulations to ascertain what the teammate who gave the command might be thinking, and why that person thinks this unexpected goal is valid. She does this by going through her own models of the world but altering them slightly, hoping to find one that leads to the stated goal. When she tilts her head to one side and furrows her eyebrows, it’s to signal that she’s running these simulations, trying to better understand her teammate’s beliefs.
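As a rough illustration only, and not a description of the Navy's actual architecture, the idea of altering one's own model of the world until it explains a teammate's unexpected command can be sketched in a few lines of Python. Everything here is hypothetical: the belief names, the toy `choose_goal` rule, and the `explain_command` helper are assumptions made up for the example, and the search only tries changing one belief at a time.

```python
from typing import Callable, Dict, Optional, Tuple

# A "world model" here is just a set of named true/false beliefs (an assumption).
Beliefs = Dict[str, bool]


def choose_goal(beliefs: Beliefs) -> str:
    """Toy decision rule: which goal makes sense given these beliefs."""
    if beliefs["crew_trapped_on_deck"]:
        return "go_to_deck"
    if beliefs["fire_in_engine_room"]:
        return "go_to_engine_room"
    return "stand_by"


def explain_command(
    own_beliefs: Beliefs,
    stated_goal: str,
    decide: Callable[[Beliefs], str] = choose_goal,
) -> Optional[Tuple[str, bool]]:
    """Look for a single belief that, if changed, would make the stated goal sensible.

    Returns (belief_name, value_the_teammate_may_hold), or None when no
    one-belief alteration of the robot's own model leads to the stated goal.
    """
    for name, value in own_beliefs.items():
        altered = dict(own_beliefs)   # copy the robot's own model
        altered[name] = not value     # alter it slightly
        if decide(altered) == stated_goal:
            return (name, not value)  # a candidate for what the teammate believes
    return None


# The robot expects to head for the engine room, but is told to go to the deck.
own = {"fire_in_engine_room": True, "crew_trapped_on_deck": False}
guess = explain_command(own, "go_to_deck")
print(guess)
```

In this toy case the robot's own beliefs point to the engine room, so the command to go to the deck is unexpected. The search finds that the command would make sense if the teammate believed crew members were trapped on deck, which is the kind of hypothesis the head tilt is meant to signal she is considering.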

Octavia isn’t programmed with emotional models. Her theory of mind is a cognitive pattern. But it functions a lot like empathy, that most cherished of all human emotions.

Other robot makers skirt the issue of their machines’ emotional intelligence. SoftBank Robotics, for instance, which sells Pepper—a “pleasant and likeable” humanoid robot built to serve as a human companion—claims that Pepper can “perceive human emotion,” adding that “Pepper loves to interact with you, Pepper wants to learn more about your tastes, your habits, and quite simply who you are.” But though Pepper might have the ability to recognize human emotions, and though Pepper might be capable of responding with happy smiles or expressions of sadness, no one’s claiming that Pepper actually feels such emotions.

What would it take for a robot creator to claim that? For one thing, we still don’t know everything that’s involved in feeling emotions.

In recent years, revolutions in psychology and neuroscience have radically redefined the very concept of emotion, making it even more difficult to pin down and describe. According to scientists such as the psychologist Lisa Feldman Barrett, a professor at Northeastern University, it is becoming increasingly clear that our emotions vary widely depending on the culture we’re raised in. They even vary widely within an individual in different situations. In fact, though we share the general feelings that make up what’s known as “affect” (pleasure, displeasure, arousal, and calmness) with most other humans and many other animals, our more acute and specific emotions vary more than they follow particular norms. Fear, for instance, is a culturally agreed-upon concept, but it plays out in our bodies in myriad ways. It’s inspired by different stimuli, it manifests differently in our brains, and it’s expressed in different ways on our faces. There’s no single “fear center” or “fear circuit” in the brain, just as there’s no reliably fearful facial expression: we all process and display our fear in radically different ways, depending on the situation—ways that, through interactions with other people, we learn to identify or label as “fear.”

When we talk about fear, then, we’re talking about a generalized umbrella concept rather than something that comes out of a specific part of the brain. As Barrett puts it, we construct emotions on the spot through an interplay of bodily systems. So how can we expect programmers to accurately model human emotion in robots?

There are moral quandaries, as well, around programming robots to have emotions. These issues are especially well demonstrated by military robots that, like Octavia, are designed to be sent into frightening, painful, or potentially lethal situations in place of less dispensable human teammates.

At a 2017 conference sponsored by the Army’s somewhat alarmingly titled Mad Scientist Initiative, Lieutenant General Kevin Mangum, the deputy commander for Army Training and Doctrine Command, specified that such robots should and will be autonomous. “As we look at our increasingly complex world, there’s no doubt that robotics, autonomous systems, and artificial intelligence will play a role,” said Mangum. The 2017 Army Robotic and Autonomous Systems Strategy predicts full integration of autonomous systems by 2040, replacing today’s bomb-disposal robots and other machines that are remotely operated by humans.

When these robots can act and think on their own, should they, like Octavia, be programmed with the semblance of human emotion? Should they be programmed to actually have human emotion? If we’re sending them into battle, should they not only think but feel alongside their human companions?

On the one hand, of course not: if we’re designing robots with the express aim of exposing them to danger, it would be sadistic to give them the capacity to suffer terror, trauma, or pain.

On the other hand, if emotion affects intelligence and vice versa, could we be sure that a robot with no emotion would make a good soldier? What if the lack of emotion leads to stupid decisions, unnecessary risks, or excessively cruel retribution? Might a robot with no emotion decide that the intelligent decision would be to commit what a human soldier would feel was a war crime? Or would a robot with no access to fear or anger make better decisions than a human being would in the same frightening and maddening situation?

And then there’s the possibility that emotion and intelligence are inextricably linked, so that there’s no such thing as an intelligent robot with no emotion. In that case, the question of how much emotion an autonomous robot should have is, in some ways, out of the hands of the programmer who designs its intelligence.

There’s also the question of how these robots might affect their human teammates.

By 2010, the U.S. Army had begun deploying a fleet of about 3,000 small tactical robots, largely in response to the increasing use of improvised explosive devices in warfare. In place of human soldiers, these robots trundle down exposed roads, into dark caves, and through narrow doorways to detect and disable unpredictable IEDs.

This fleet is composed mostly of iRobot’s PackBot and QinetiQ North America’s Talon, robots that aren’t particularly advanced. They look a little like WALL-E, their boxy metal bodies balanced on rubber treads that allow them to do a pretty good job of crossing rocky terrain, climbing stairs, and making their way down dusky hallways. They have jointed arms fitted with video cameras to survey their surroundings, and claws to tinker with explosive devices.

They’re useful tools, but they’re not exactly autonomous. They’re operated remotely, like toy cars, by soldiers holding devices that are sometimes fitted with joysticks. As an example of AI, the PackBot isn’t all that much more advanced than iRobot’s better-known product, the Roomba that vacuums under your armchair.

And yet even now, despite the inexpressive nature of these robots, human soldiers develop bonds with them. Julie Carpenter demonstrates in Culture and Human-Robot Interaction in Militarized Spaces that these relationships are complicated, both rewarding and painful.

When Carpenter asked one serviceman to describe his feelings about a robot that had been destroyed, he responded:

I mean, it wasn’t obviously … anywhere close to being on the same level as, like, you know, a buddy of yours getting wounded or seeing a member getting taken out or something like that. But there was still a certain loss, a sense of loss from something happening to one of your robots.

Another serviceman compared his robot to a pet dog:

I mean, you took care of that thing as well as you did your team members. And you made sure it was cleaned up, and made sure the batteries were always charged. And if you were not using it, it was tucked safely away as best could be because you knew if something happened to the robot, well then, it was your turn, and nobody likes to think that.

Yet another man explained why his teammate gave their robot a human name:

Towards the end of our tour we were spending more time outside the wire sleeping in our trucks than we were inside. We’d sleep inside our trucks outside the wire for a good five to six days out of the week, and it was three men in the truck, you know, one laid across the front seats; the other lays across the turret. And we can’t download sensitive items and leave them outside the truck. Everything has to be locked up, so our TALON was in the center aisle of our truck and our junior guy named it Danielle so he’d have a woman to cuddle with at night.

These men all stress that the robots are tools, not living creatures with feelings. Still, they give their robots human names and tuck them in safely at night. They joke about that impulse, but there’s a slightly disturbing dissonance in the jokes. The servicemen Carpenter interviewed seem somewhat stuck between two feelings: they understand the absurdity of caring for an emotionless robot that is designed to be expendable, but they nevertheless experience the temptation to care, at least a little bit.

Once Carpenter had published her initial interviews, she received more communication from men and women in the military who had developed real bonds with their robots. One former explosive ordnance disposal technician wrote:

As I am an EOD technician of eight years and three deployments, I can tell you that I found your research extremely interesting. I can completely agree with the other techs you interviewed in saying that the robots are tools and as such I will send them into any situation regardless of the possible danger.

However, during a mission in Iraq in 2006, I lost a robot that I had named “Stacy 4” (after my wife who is an EOD tech as well). She was an excellent robot that never gave me any issues, always performing flawlessly. Stacy 4 was completely destroyed and I was only able to recover very small pieces of the chassis. Immediately following the blast that destroyed Stacy 4, I can still remember the feeling of anger, and lots of it. “My beautiful robot was killed …” was actually the statement I made to my team leader. After the mission was complete and I had recovered as much of the robot as I could, I cried at the loss of her. I felt as if I had lost a dear family member. I called my wife that night and told her about it too. I know it sounds dumb but I still hate thinking about it. I know that the robots we use are just machines and I would make the same decisions again, even knowing the outcome.

I value human life. I value the relationships I have with real people. But I can tell you that I sure do miss Stacy 4, she was a good robot.

If these are the kinds of testimonials that can be gathered from soldiers interacting with faceless machines like PackBots and Talons, what would you hear from soldiers deployed with robots like Octavia, who see and hear and touch and can anticipate their human teammates’ states of mind?

In popular conversations about the ethics of giving feelings to robots, we tend to focus on the effects of such technological innovation on the robots themselves. Movies and TV shows from Blade Runner to Westworld attend to the trauma that would be inflicted on feeling robots by humans using them for their entertainment. But there is also the inverse to consider: the trauma inflicted on the humans who bond with robots and then send them to certain deaths.

What complicates all this even further is that if a robot like Octavia ends up feeling human emotions, those feelings won’t only be the result of the cognitive architecture she’s given to start with. If they’re anything like our emotions, they’ll evolve in the context of her relationships with her teammates, her place in the world she inhabits.

If her unique robot life, for instance, is spent getting sent into fires by her human companions, or trundling off alone down desert roads laced with explosive devices, her emotions will be different from those experienced by a more sheltered robot, or a more sheltered human. Regardless of the recognizable emotional expressions she makes, if she spends her life in inhumane situations, her emotions might not be recognizably human.

Louisa Hall, a writer in New York, is the author of Speak, a 2015 novel about artificial intelligence.